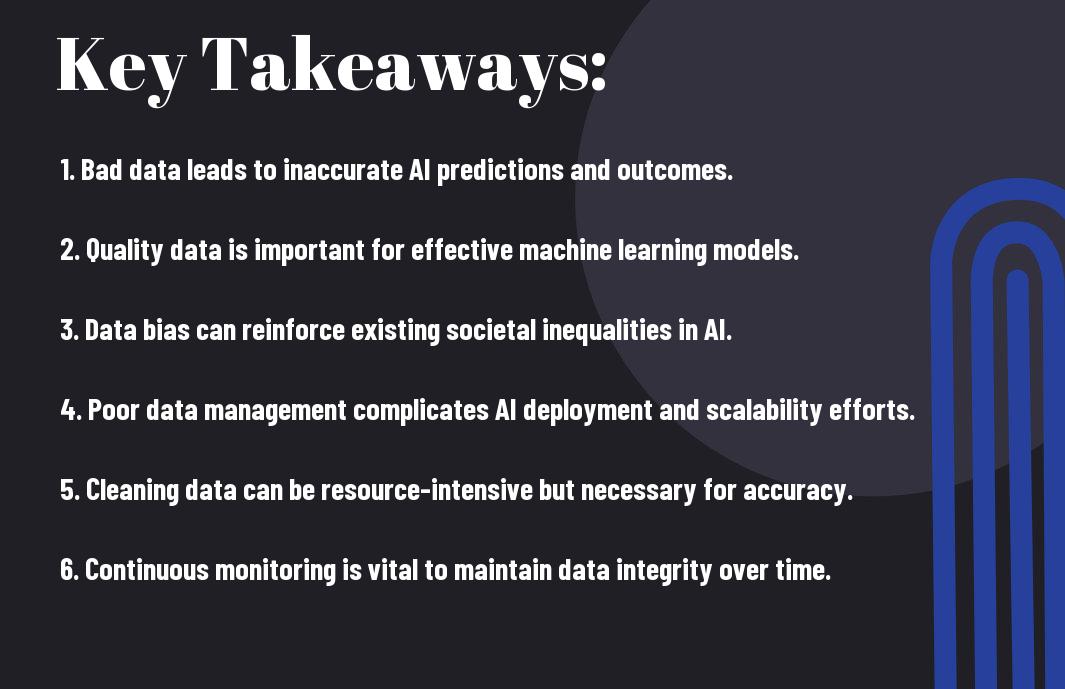

There’s a growing concern in Artificial Intelligence that the integrity of your data can determine the success or failure of AI systems. As you navigate the complexities of AI implementation, it’s crucial to understand how bad data—whether it’s inaccurate, incomplete, or biased—can lead to flawed algorithms and misguided outcomes. This informative article will guide you through the pitfalls of poor data quality and highlight the far-reaching consequences that can arise from neglecting this imperative component of intelligent systems.

The Importance of Data Quality

For any Artificial Intelligence (AI) system to function effectively, the quality of data is paramount. Good quality data serves as the bedrock for AI technologies, providing the training models with accurate, relevant, and timely information. When you base your AI innovations on high-quality data, you empower them to make informed decisions, predict trends, and generate insights that are more reliable. Conversely, should the data feeding your systems be flawed or distorted, the repercussions may ripple through all layers of the AI model, resulting in erroneous outputs that could misinform or mislead you in your applications.

Data as the Foundation of AI

Foundation plays a critical role in the development and deployment of Artificial Intelligence models. You must understand that data acts as the foundational element for any AI system, significantly influencing its learning process and operational efficiency. By providing diverse and high-quality data, you allow the AI models to recognize patterns and make predictions based on information that accurately reflects the real world. If your data lacks diversity or is riddled with inaccuracies, your AI will struggle to adapt to new situations, limiting its potential and hindering your desired outcomes.

Furthermore, the training phase of AI demands meticulous attention to the quality of the input data. When you invest time in refining your datasets, you are not merely enhancing the output of your AI; you are also ensuring the sustainability of its performance over time. Good quality data allows for more robust model training, enabling the AI to generalize better and perform effectively across various scenarios. This consistency and reliability will be a massive asset to you as you deploy these technologies in dynamic environments.

Lastly, the relationship between data quality and AI’s performance is not static; it evolves over time. As you gather more data, continual quality assessments become vital. You have the opportunity to conduct regular audits and refine your datasets to ensure that they still meet the required standards for your AI applications. This iterative process fortifies the foundation upon which your AI is built, ensuring it remains relevant and capable of delivering valuable insights even as contexts change.

1. Explain the significance of data integrity in AI applications.

2. How does data quality affect the training of machine learning models?

3. Can you provide examples of high-quality versus low-quality data in AI?

4. What are the best practices for ensuring data quality in AI projects?

5. Discuss the relationship between data diversity and AI performance.

The Consequences of Ignoring Data Quality

With a disregard for data quality, you expose yourself to a myriad of risks that can compromise the effectiveness of your AI implementations. When bad data enters the equation, the models’ learning becomes skewed, potentially resulting in biased or inaccurate outcomes. This is not merely an abstraction; such consequences manifest in real-world scenarios, leading to poor decisions, increased operational costs, and a decline in trustworthiness of your AI systems. Furthermore, the implications stretch beyond immediate performance, as damage to your brand’s reputation may ensue, particularly if the AI outputs impact external stakeholders.

Moreover, bad data can perpetuate itself within AI systems, creating a vicious cycle that is hard to break. As your AI learns from this flawed data, the issues compound over time, rendering troubleshooting efforts increasingly complex. You may find that the longer bad data persists, the more entrenched the errors become, making it challenging to recalibrate your models or adjust their parameters to rectify the foundational problems that lead to miscalculations.

In practical terms, by ignoring data quality, you vitally gamble with your strategic decisions, sometimes with irreversible consequences. This means heightened risks not only manifest in financial losses but can also affect compliance with regulations, particularly in industries demanding stringent data governance. The long-term ramifications can jeopardize not just individual projects, but the overall integrity of AI initiatives within your organization.

1. What are the most common pitfalls in data management for AI?

2. Explain how poor data quality can lead to bias in AI algorithms.

3. What strategies can be implemented to mitigate the risks associated with bad data?

4. Can you give examples of industries severely impacted by low data quality?

5. How can businesses recover from the fallout of poor data quality in their AI systems?

The consequences of ignoring data quality cannot be overstated; it serves as a silent architect shaping the success or failure of your AI initiatives.

1. Discuss the immediate operational impacts of using bad data in AI.

2. How does bad data affect consumer trust in AI solutions?

3. What role does continuous data quality assessment play in AI success?

4. Analyze the long-term effects of compromised data integrity on AI projects.

5. What frameworks can be used for effective data quality management?

Sources of Bad Data

You may not realize it, but the quality of data used in Artificial Intelligence (AI) systems is crucial for their success. Bad data can originate from various sources, and understanding these sources is vital for improving data integrity. The impact of bad data often cascades through the entire AI modeling process, leading to flawed conclusions and erroneous predictions. Some common sources include human error and bias, incomplete or inconsistent data, and outdated or obsolete information, each playing a critical role in skewing the effectiveness of AI applications.

Here are some ChatGPT prompt samples related to this subsection:

1. Discuss the primary sources of bad data in Artificial Intelligence.

2. Explain how human error contributes to bad data in AI systems.

3. Analyze the effects of incomplete or inconsistent data on AI outcomes.

4. What are the consequences of using outdated information in AI?

5. Describe the challenges posed by bias in data collection.

Human Error and Bias

On any given day, you might encounter numerous instances of human error—whether it’s a typo in a dataset or the misinterpretation of data entry guidelines. The influence of humans doesn’t stop at data entry; it extends to how datasets are curated and selected for training AI models. A dataset filled with errors or biased inputs compromises the entire AI system’s ability to learn effectively, ultimately resulting in misguided outputs. In many cases, biases can manifest from the subjective choices made by individuals during the data collection process, which can perpetuate systemic stereotypes or discrimination. AI systems trained on such biased data will reflect and reinforce these biases, further exacerbating existing inequalities.

Additionally, human biases can inadvertently influence the selection of data attributes deemed relevant for AI training. Suppose an AI model is designed to identify potential criminals based primarily on historical arrest data. If that arrest data is laden with racial bias, the model learns to make predictions aligned with those prejudices, leading to harmful repercussions. This illustrates the cascading effect of human error and bias—potentially unwittingly selecting harmful data that ultimately shapes AI’s operations and can disproportionately impact vulnerable communities.

Moreover, the use of biased data leads to a greater issue of trustworthiness in AI applications. When errors or biases arise, stakeholders—from developers to end-users—might question the integrity and fairness of AI outputs. This has implications for large-scale implementations, like AI in law enforcement or hiring systems. If users lose trust in AI due to its flawed data, they may be less inclined to adopt such technologies in the future, stifling the advancement of potentially beneficial AI applications.

Here are some ChatGPT prompt samples related to this subsection:

1. Examine how human errors affect data quality in AI.

2. Explore the role of bias in data collection and its implications for AI.

3. What strategies can be used to mitigate human error in data handling?

4. Discuss the long-term consequences of biased AI systems.

5. Identify steps to ensure fair data collection practices in AI development.

Incomplete or Inconsistent Data

Data is the lifeblood of artificial intelligence; however, the mere presence of data does not guarantee its quality. Incomplete or inconsistent data can dramatically hinder the learning process of AI systems. Imagine an AI model designed for healthcare predictions, relying on patient data that is filled with gaps—incomplete medical histories, missing test results, or inconsistent definitions of health metrics. These issues can lead to significant distortions in the model’s understanding of reality, resulting in faulty predictions that can have dire consequences for patient care. You may find that AI models trained on incomplete datasets often show a tendency to overfit, i.e., to become excessively specialized in the data they have been given, which may not generalize well to new information.

On a broader scale, inconsistent data stemming from different sources, measurement methods, or data entry standards can further complicate the situation. Inconsistencies can confuse AI algorithms, resulting in mixed signals during training phases which may lead to unstable models. If a dataset includes measurements with varied units—say, pounds versus kilograms—your AI could generate inaccurate outputs simply based on how the data was presented. This is particularly concerning in applications requiring precision, such as financial modeling or scientific research, where even slight discrepancies could yield flawed results.

To better navigate these pitfalls, it can be beneficial for developers and data scientists to establish rigorous data management practices. You can focus on implementing thorough data validation techniques, such as anomaly detection to catch inconsistencies and regular audits to identify gaps in the datasets over time. Having rigorous checks in place ensures that the AI systems you operate on are equipped with high-quality data, ultimately leading to more accurate and trustworthy predictions.

Here are some ChatGPT prompt samples related to this subsection:

1. Analyze the impact of incomplete data on AI decision-making processes.

2. What are the common causes of data inconsistencies in AI projects?

3. Suggest methods for verifying the completeness of datasets.

4. Discuss the importance of standardization in data collection.

5. How does inconsistent data affect the reliability of AI solutions?

Data quality is imperative, and ensuring completeness and consistency should be a priority in your data management strategies. Incomplete or inconsistent data raises significant concerns for AI systems, compromising the robustness of their insights and predictions. The ramifications of neglecting this aspect can be far-reaching, ultimately leading to adverse impacts on industries ranging from healthcare to finance.

Here are some ChatGPT prompt samples related to this subsection:

1. Elaborate on the consequences of incomplete data in AI applications.

2. How can AI systems be trained to handle inconsistencies in data?

3. What are real-world examples where inconsistent data led to failures in AI?

4. Describe best practices for maintaining data consistency in AI projects.

5. How does the completeness of data influence model accuracy?

Outdated or Obsolete Information

The data landscape is continually evolving, and it is crucial to keep AI systems updated with the latest information. The rapid pace of technological change means that using outdated data can lead to misguided modeling decisions and ineffectual AI applications. When data loses its relevance, you risk operating under false assumptions. Take, for instance, a machine learning algorithm trained on economic trends from ten years ago; it may not effectively capture recent shifts in consumer behavior or economic indicators, leading to business strategies that miss the mark. This scenario clearly illustrates how relying on stale data affects an AI system’s adaptability to the present conditions.

Moreover, outdated information can skew predictive analytics, causing organizations to make decisions based on a distorted reality. If you consider an AI model used for predicting demand for a product that underwent a significant redesign, using pre-redesign data would lead to inaccurate forecasts, potentially resulting in overproduction or underproduction. Such discrepancies threaten not just profitability but also a brand’s reputation, demonstrating the importance of employing the most current data available.

Additionally, the challenge of outdated data often goes hand in hand with a lack of ongoing data governance. You might find that organizations frequently underestimate the imperative task of reviewing and refining datasets to eliminate outdated inputs. Improving processes for continuously refreshing data sources and revisiting the relevance of existing data will help keep AI models responsive to changes, enhancing their effectiveness.

Here are some ChatGPT prompt samples related to this subsection:

1. How does outdated information impact AI prediction models?

2. Explore the challenges organizations face in maintaining up-to-date datasets.

3. Discuss strategies for ensuring data freshness in AI applications.

4. What are the implications of relying on obsolete information in decision-making?

5. Describe the importance of continuous data review in AI systems.

Bias is often compounded when outdated information is present. If previous datasets reflect systemic biases, and these datasets remain unchanged, such biases will continue to impact AI outputs. This underscores the importance of eliminating outdated information to create a more equitable AI landscape, ensuring the generated insights serve all demographics fairly.

Here are some ChatGPT prompt samples related to this subsection:

1. Discuss the relationship between outdated data and bias in AI.

2. How can regular updates mitigate biases in AI systems?

3. What challenges do organizations face in eliminating outdated information?

4. Explore the impact of historical biases on current AI datasets.

5. How does the age of data influence fairness in AI predictions?

The Impact on AI Systems

Despite the widespread enthusiasm for artificial intelligence (AI), it’s important to understand that the quality of data significantly influences the systems’ performance and reliability. Poor data can lead to catastrophic failures in AI applications, especially when they are integrated into critical sectors like healthcare, finance, or transportation. When you rely on AI systems powered by flawed data, you place your trust in a technology that is potentially misleading or even harmful, which underscores the importance of rigorous data management and preprocessing. The implications of bad data can reverberate through your organization, affecting decision-making, operational efficiency, and stakeholder confidence.

1. Discuss how incorrect data can lead to disastrous decisions in AI systems.

2. Explore the role of data quality control in developing trustworthy AI.

3. Analyze case studies where bad data caused significant AI failures.

Decreased Accuracy and Reliability

Accuracy is paramount in any AI system, and bad data can significantly decrease this vital metric. When the training sets used to teach an AI model are riddled with inaccuracies, the resulting model becomes unreliable. If you deploy an AI that is based on erroneous data, the predictions it generates may not reflect reality. This can misguide your strategies and lead to poor outcomes, especially in high-stakes environments like predictive healthcare, where incorrect data can influence life-or-death decisions. Moreover, less accurate models require constant recalibration, which can drain your resources and time.

In any system, reliability is closely tied to accuracy. As you continue to work with an AI model that was trained on flawed data, you may start to notice performance issues that compound over time. If the underlying data does not represent the diverse cases the model will encounter, you will likely experience higher variability in results. This can cause your team to question the system’s integrity, leading to hesitation in using AI-generated insights for critical decisions. Consequently, it diminishes your users’ trust in the technology, which is a crucial element for successful AI implementation.

Additionally, the repercussions of decreased accuracy can extend beyond immediate operational challenges, affecting customer satisfaction and brand reputation. If your AI systems are generating invalid outputs, the end-users of your product or service may become frustrated. You risk losing clients who depend on accurate and reliable results. In this fast-paced technology landscape, reputation can make or break your business, emphasizing the necessity of high-quality data for maintaining customer loyalty and a competitive edge.

1. Explain the connection between data quality and model performance accuracy.

2. Investigate consequences of using AI models with low accuracy in business.

3. Suggest methodologies for improving the reliability of AI systems.

Unfair or Discriminatory Outcomes

An often-overlooked consequence of bad data in AI systems is the potential for generating unfair or discriminatory outcomes. When datasets contain bias, whether through unintentional human programming or incomplete data collection, AI models can perpetuate societal inequalities. As a user, you might be inadvertently endorsing these biases when you leverage AI tools for hiring, lending, or law enforcement, where even slight inaccuracies can skew results drastically. It’s crucial for you to scrutinize the data feeding into your AI systems to guard against these harmful outcomes.

Biases in the data are often reflective of historical inequalities, and without being mindful, AI can reinforce and even exacerbate those disparities. If your AI system is trained predominantly on data from a specific demographic, its outputs could unfavorably target or exclude others. Such discrimination doesn’t just pose ethical issues; it can also lead to significant legal repercussions and damage to your organization’s reputation. By ignoring the biases within your datasets, you tacitly endorse discrimination, which can have far-reaching implications for both your users and broader society.

Moreover, achieving fairness in AI outcomes requires a proactive approach to data curation. You need to implement robust evaluation frameworks to identify and address discriminatory patterns in your datasets. Relying solely on algorithms to flag issues may not suffice; human oversight is necessary to understand context and societal implications. You must adopt ethical data management practices, fostering an inclusive approach that actively works to eliminate bias and promote fairness in AI systems that affect people’s lives.

1. Discuss how bias in training data can lead to discriminatory AI outcomes.

2. Explore methodologies for identifying and correcting biases in datasets.

3. Analyze the societal implications of unfair AI decision-making.

Outcomes from flawed AI systems can also lead to an increased risk of errors and failures. Poor-quality data inputs are often the root cause of these inaccuracies. When you deploy an AI system without properly vetting the data it draws from, you expose yourself to the risk of systemic errors. Each erroneous data point can compound exponentially as the AI processes multiple layers of information, thus perpetuating mistakes. You might find yourself encountering unexpected issues that could have been easily avoided had the data been meticulously validated before deployment.

The errors arising from bad data can range from benign to catastrophic. For example, in fields such as finance, faulty predictive models may incorrectly assess risks, resulting in substantial financial losses. In healthcare, decisions based on misleading data could lead to misdiagnoses, jeopardizing patient safety. The consequences of such failures escalate when organizations rely heavily on AI for core functions, which can result in operational disruptions and a shake-up of trust among customers and stakeholders. As a user, failing to address data quality issues invites a precarious operational landscape.

Furthermore, regular audits of your data hygiene and systematic checks of your AI outputs are important in minimizing the risk of errors and failures. You should also consider training models on diverse, high-quality datasets that are reflective of real-world scenarios. This not only helps improve the robustness of your outcomes but also enhances the reliability of your AI systems, thus ensuring that you can confidently navigate the complexities of an increasingly data-driven world.

1. Elaborate on common error types stemming from misleading datasets.

2. Propose strategies to mitigate risks associated with bad data in AI.

3. Discuss the importance of data audits for risk management in AI applications.

Discriminatory outcomes in AI systems can also perpetuate existing societal inequalities, which puts additional pressure on organizations that deploy this technology. When your models yield biased results—perhaps favoring one demographic over another—you not only contribute to unfair treatment but also risk potential backlash from consumers and advocacy groups. The perception of discrimination can drive consumers away, eroding trust in your brand. You must remain vigilant and actively engage in practices that promote equitability, thus ensuring that your organization’s AI efforts can contribute positively to social justice rather than hindrance.

1. Investigate the long-term effects of discriminatory AI practices on society.

2. Advise organizations on how to foster an equitable AI ecosystem.

3. Develop guidelines for responsible AI practice focused on fairness.

Reliability in AI systems necessitates a commitment to continual improvement and rigorous data management. You should maintain an ongoing process that ensures data integrity, continuously monitor AI outputs for signs of degradation, and be prepared to iterate the model frequently. By creating a culture that values data cleanliness, you bolster the health of your AI systems and empower stakeholders to rely on their outputs confidently. A proactive approach not only benefits your operations but can place you ahead of competitors who may still underestimate the long-term value of good data.

Data Preprocessing and Cleaning

All data used in artificial intelligence (AI) systems is not created equal. Ensuring that the data used is clean, consistent, and relevant is vital for the accuracy and reliability of AI models. Data preprocessing and cleaning serve as the foundational steps in your AI pipeline, where raw data is transformed into a format suitable for analysis. This process often involves filtering out irrelevant information, handling missing values, correcting inconsistencies, and transforming data into a suitable format. Bad data can severely impede the performance of machine learning algorithms, leading to misleading results and subpar decision-making. Thus, effective preprocessing can save you time and resources while improving your AI outcomes.

1. Suggest effective methods for data preprocessing in AI.

2. How do data cleaning techniques differ for various types of data?

3. Explain the importance of data normalization.

4. What tools can assist in the data cleaning process?

5. Discuss common pitfalls in data preprocessing.

The Role of Data Scientists and Engineers

Preprocessing tasks are primarily undertaken by data scientists and engineers, who play a crucial role in ensuring that the quality of data meets the rigorous demands of AI algorithms. These professionals are trained to recognize patterns and anomalies in data sets, employing various statistical techniques to assess the data’s integrity. Your ability to leverage their expertise can greatly enhance the effectiveness of your AI initiatives. Data scientists typically focus on analyzing and interpreting data, while engineers often concentrate on the architectural aspects, ensuring that the data pipelines are efficient and scalable.

In practice, these teams work closely together to implement data validation protocols and best practices for cleaning. As a data scientist or engineer, you ought to develop a keen eye for detail, as overlooking minor discrepancies can lead to major inaccuracies further down the line. A collaborative approach is vital; while data scientists may identify trends and propose models, engineers will ensure that data flows smoothly throughout the pipeline. This teamwork is vital for maintaining a high standard of data quality across all AI projects.

Ultimately, the seamless integration of data scientists and engineers is pivotal in safeguarding your AI systems against the pitfalls of bad data. This cross-functional collaboration forms the backbone of successful AI model development, allowing for a stronger focus on innovative strategies and solutions. As you continue to build and optimize your AI frameworks, consider how strategic engagement with your data team can improve overall data health and model performance.

1. Explain the collaboration between data scientists and engineers.

2. How can data scientists predict potential data issues?

3. What qualifications should data professionals possess?

4. Describe the role of a data engineer in AI projects.

5. What challenges do data scientists face during preprocessing?

Techniques for Identifying and Correcting Errors

Preprocessing your data not only involves cleaning it but also determining whether the data is accurate and usable. There are various techniques that data scientists employ to identify and correct errors. First, statistical analyses such as mean, median, and standard deviation can highlight outliers or aberrant data points that could skew results. Visualization tools are another critical technique, as they can reveal data patterns that may not be immediately apparent, allowing you to uncover discrepancies that necessitate correction.

Moreover, automated checks and scripts can be implemented to evaluate data quality at scale. These scripts can flag missing or null values, detect duplicates, and confirm that data entries adhere to expected formats. Additionally, domain knowledge is invaluable; understanding the context surrounding your data enables you to evaluate its validity more comprehensively. Techniques such as cross-validation—comparing your data against established standards or external datasets—can also help in identifying potential errors, ensuring a high-quality data foundation for your AI systems.

Engaging in comprehensive error identification and correction techniques can yield significant dividends, streamlining your model training process. When errors are rectified early in the data pipeline, the risk of compounding issues is reduced, bolstering the reliability of your AI models and their predictive accuracy. A proactive approach to data integrity helps maintain a competitive edge in any AI initiative.

1. What are the most common techniques for data error detection?

2. How can you effectively visualize data to find discrepancies?

3. Why is domain knowledge important in identifying errors?

4. Discuss the role of automation in data quality assurance.

5. How can machine learning aid in error correction?

With numerous techniques available for identifying and correcting errors, you must prioritize these methods to ensure data quality. These approaches not only enhance the reliability of your datasets but also facilitate a more accurate model training process. Engaging in thorough data cleaning practices can create a positive feedback loop, improving the overall robustness of your AI solutions. Embracing new technologies and analytical methods can further supplement your data validation efforts, fostering a culture of precision and proactive error management within your organization.

1. Describe an innovative method for data error correction.

2. How can AI be utilized to enhance data cleaning processes?

3. What role does machine learning play in identifying errors?

4. Give examples of error-correcting algorithms.

5. How can real-time data validation be implemented?

The Importance of Data Validation and Verification

It is paramount that the quality of data is not only ensured through the processes of cleaning and preprocessing but also maintained through rigorous validation and verification techniques. Data validation refers to the measures taken to confirm that data meets specific criteria before it is used in AI applications. This crucial step can prevent the incorporation of erroneous data into machine learning models, which in turn directly affects the integrity of outputs produced by these systems. By validating your data, you allow for a higher level of confidence in its correctness and applicability.

Verification, on the other hand, serves as a back-end check where data is cross-referenced against reliable benchmarks to confirm its authenticity and accuracy. Leveraging both validation and verification practices creates a robust safeguard against data inaccuracies that could lead to poor AI performance. Without a commitment to these practices, you risk engaging in processes that are built upon faulty foundations, leading to incorrect inferences, misguided strategies, and ultimately a failure in your AI objectives.

Therefore, prioritizing validation and verification in your data preprocessing efforts sets the stage for successful AI outcomes. Committing to these methods will not only build trust in data-driven strategies but also position your organization for sustained success in deploying AI technologies. By integrating these standard practices into your workflow, you will equip your projects with a robust framework that sets a standard for high-quality, actionable insights from your data.

1. Explain how data validation differs from data verification.

2. Why is data integrity vital in AI?

3. What processes can ensure continuous data validation throughout a project?

4. How can organizations enforce data validation policies?

5. Discuss case studies that highlight the importance of data verification.

Identifying the importance of data validation and verification can dramatically influence your AI success. Knowing when and how to apply these practices can prevent potential pitfalls, ensuring that your AI models are built on a solid foundation of accurate data. This foundational integrity translates not only to improved accuracy in outcomes but also to a greater trust in the data-driven decisions your organization will make.

1. How do mistakes in validation processes affect AI results?

2. What role do governance policies play in data validation?

3. Describe the impact of data validation on user trust in AI.

4. How does ongoing validation contribute to model performance?

5. What tools exist for implementing effective data validation?

The Effects on Machine Learning

Many individuals and organizations are increasingly leveraging artificial intelligence (AI) to drive decision-making and operational efficiencies. However, the success of these machine learning models is intricately linked to the quality of the data they are trained on. Poor quality data can lead to misleading insights, biased outcomes, and suboptimal performance. This section will dissect the effects of bad data on machine learning processes, emphasizing the importance of data integrity in achieving reliable AI systems.

1. What are the consequences of using bad data in machine learning?

2. Can bad data lead to biased AI models?

3. How can you ensure the quality of data used in AI training?

4. What steps can be taken to mitigate bad data in machine learning?

5. How does data quality impact AI-driven decision-making?

Poisoning Attacks and Data Tampering

An increasingly concerning challenge in machine learning is the phenomenon of data poisoning and tampering. These attacks involve the deliberate introduction of incorrect or misleading data points into the training dataset, ultimately skewing the resulting AI model’s outputs. Such malicious activities can take various forms—including the injection of noise, the mislabeling of data, or even the alteration of existing data entries. The consequences of these actions can be dire; not only can they degrade the performance of the model, but they can literally change the fundamental behavior of the AI system, leading to decisions that can be harmful or unjust.

Furthermore, when a machine learning model is trained on poisoned data, the risks extend beyond performance issues. The integrity of the AI itself can come into question, creating a loss of trust not just among stakeholders but also in public perception. This erosion of trust can have far-reaching consequences, particularly in domains like healthcare or finance, where decisions made by AI systems can have real-world implications for individuals’ health, safety, and financial wellbeing. To combat this growing threat, organizations must prioritize the development of robust data validation techniques and implement rigorous monitoring, ensuring their datasets remain secure and free from malicious interference.

Moreover, the field of adversarial machine learning is emerging as a vital area of research aimed at understanding and developing defenses against poisoning attacks and data tampering. As models become increasingly sophisticated and integrated into daily life, the imperative to safeguard them from corrupt data becomes paramount. Without effective strategies to address these vulnerabilities, the risk of compromised AI systems will only increase, necessitating vigilance and proactive measures to protect data integrity at every stage of the machine learning lifecycle.

1. What are common methods used in data poisoning attacks?

2. How can organizations detect data tampering in their datasets?

3. What strategies can be implemented to protect machine learning systems from poisoning?

4. Can AI models recover from the effects of data tampering?

5. How do poisoning attacks impact different types of machine learning algorithms?

Overfitting and Underfitting Models

As you probe deeper into the complexities of machine learning, two critical concepts emerge: overfitting and underfitting. These issues often arise due to the quality of the training data used in developing your models. Overfitting occurs when a model learns too much detail from the training data, capturing noise along with the underlying patterns. This results in a model that performs exceptionally well on training data but fails to generalize to unseen data, yielding poor performance in real-world applications. In contrast, underfitting happens when the model is too simplistic to capture the underlying architecture of the data, leading to equally dismal results. Both scenarios highlight the significance of a well-structured and high-quality dataset.

An necessary aspect of tackling overfitting and underfitting lies in understanding the balance of complexity within your models concerning the training datasets. Proper methods such as cross-validation can help you assess whether your model generalizes well or is prone to either extreme. Moreover, incorporating techniques like regularization can aid in preventing overfitting by penalizing overly complex models. Ultimately, your goal must be to achieve a well-calibrated model that harmonizes with your data, ensuring it provides accurate predictions across a range of inputs.

Furthermore, as you work to refine your models, continuously monitoring performance metrics becomes crucial. If you notice signs of overfitting or underfitting, adjusting your dataset—whether through data augmentation, better feature selection, or enhancing the quality of input data—can significantly improve model performance. Balancing these tendencies requires a nuanced understanding of your data as well as the tools at your disposal, underscoring the pivotal role that quality data plays in nurturing effective machine learning solutions.

1. How can you recognize overfitting in your machine learning model?

2. What techniques can be utilized to prevent overfitting?

3. What are the implications of using low-quality data on model performance?

4. How does feature selection impact overfitting or underfitting?

5. Is it possible to tune a model to avoid both overfitting and underfitting simultaneously?

Tampering with the training dataset can exacerbate the challenges of overfitting and underfitting by skewing the data distribution. As a consequence, your model may learn to predict patterns based on flawed or unrepresentative samples, further distancing itself from the intended outcomes. This reinforces the critical need for rigorous data validation processes and the importance of selecting a diverse and well-rounded dataset when developing AI applications.

1. What strategies can help mitigate data tampering risks in machine learning?

2. How does data diversity influence the performance of AI models?

3. What tools can you use to evaluate model bias related to overfitting or underfitting?

4. How do training and validation datasets affect overall model accuracy?

5. What role does domain knowledge play in tackling overfitting and underfitting?

The Consequences of Unbalanced Datasets

For instance, when your training data is unbalanced—meaning one class has significantly more samples than another—the performance of your machine learning model can become skewed. This imbalance often leads to models that are biased towards the majority class, exacerbating issues of fairness and accuracy. Such imbalances can result in a model that fails to correctly identify or predict outcomes for the minority class, which, in certain applications—like fraud detection or medical diagnosis—can have serious repercussions. In simple terms, a model trained on imbalanced data is less adept at identifying the rare events that you might be most interested in.

The consequences of unbalanced datasets extend beyond individual model performance; they can also impact broader decision-making processes. Stakeholders relying on AI-generated insights may not realize the limitations posed by unbalanced data, leading to flawed strategies based on incomplete or misleading information. As organizations pivot towards data-driven decision-making, the responsibility falls on data scientists and engineers to ensure that the datasets they use are representative of the real world and truly reflect the complexities of the issues at hand. This effort involves not only applying statistical techniques to correct for imbalance but also fostering awareness around the impact of data distribution on AI outcomes.

Ultimately, your approach to addressing unbalanced datasets should prioritize fairness, transparency, and inclusivity. Techniques such as resampling, synthetic data generation, or utilizing algorithms that adjust for imbalances can enhance your models’ performance across all classes. By recognizing and addressing the limitations imposed by unbalanced data, you position your AI systems to perform more accurately and equitably, ensuring they serve the needs of all stakeholders and produce trustworthy results.

1. What are common methods for handling unbalanced datasets in machine learning?

2. How does class imbalance affect model evaluation metrics?

3. Can you explain the concept of synthetic data generation?

4. What are the consequences of bias in AI systems related to unbalanced datasets?

5. How can you effectively communicate the risks of unbalanced data to stakeholders?

Overfitting and underfitting are exacerbated by issues related to unbalanced datasets, as your model may again fail to represent the minority class effectively. This cascade of challenges can lead to a compounding effect on your model’s performance and the overall reliability of the AI system. Hence, focusing on achieving balanced datasets is crucial to ensuring well-rounded and robust outcomes in your machine learning initiatives.

1. How can you identify when your dataset is unbalanced?

2. What impacts does removing outliers have on dataset balance?

3. How does model architecture influence handling of unbalanced datasets?

4. What is the impact of transfer learning on unbalanced data?

5. How can collaborative efforts improve dataset quality and balance?

Real-World Examples of Bad Data

Now, when discussing the impact of bad data on artificial intelligence (AI), it is imperative to consider real-world examples that illustrate how data imperfections can lead to significant consequences. In various domains such as healthcare, finance, and technology, the implications of utilizing flawed data can result in not only inefficiencies but also societal challenges. The following subsections will explore several notable instances of bad data, each shedding light on the critical importance of data quality in AI systems.

- Discuss the implications of biased facial recognition systems.

- Provide examples of flawed healthcare diagnostics due to bad data.

- Explore the ramifications of inaccurate financial predictions.

- Explain how bad data affects AI decision-making processes.

- Discuss strategies to mitigate the impact of bad data on AI.

Biased Facial Recognition Systems

One of the most prominent examples of bad data is found in biased facial recognition systems. These systems are increasingly relied upon in myriad applications, from law enforcement to personal device security. However, research has shown that the datasets used to train these facial recognition algorithms often lack diversity, disproportionately representing lighter-skinned individuals, while underrepresenting people of color and women. This imbalance can lead to higher error rates for marginalized groups, resulting in wrongful identifications or rejections. The implications of such biases are grave, as they not only foster discriminatory practices but also erode public trust in technology that is supposed to enhance safety and efficiency.

The fallout from biased facial recognition extends beyond individual cases of misidentification. For instance, wrongful arrests stemming from inaccurate facial recognition results can have devastating ramifications for those affected. These incidents can damage reputations, lead to emotional trauma, and jeopardize future opportunities within employment and social standing. You should consider how AI systems rooted in flawed data can exacerbate existing societal inequalities, perpetuating cycles of disadvantage. As a society increasingly relies on AI technologies, addressing these biases becomes imperative to ensuring fairness and justice.

To mitigate the effects of bad data in facial recognition systems, some organizations are beginning to adopt more comprehensive data collection practices, striving for greater inclusivity in their datasets. Enhanced transparency in how these systems are trained and tested is also a crucial step toward accountability. By prioritizing diverse data sources and implementing rigorous auditing procedures, you can help foster a more equitable landscape for AI technologies, where everyone’s identity is fairly represented and accurately recognized.

- Analyze the impact of biased facial recognition systems on society.

- Discuss the legal implications of wrongful identifications in AI.

- Explore solutions to reduce bias in AI training datasets.

- Examine case studies of facial recognition failures.

- Investigate how technology can enhance diversity in AI systems.

Flawed Healthcare Diagnostics

Diagnostics in healthcare provide another critical example of how bad data can undermine the capabilities of AI systems. When AI algorithms are applied to diagnostic tools, the accuracy of their assessments heavily relies on the quality and breadth of the data they are trained on. However, a considerable amount of existing medical data is plagued with inaccuracies, outdated information, or lacks representation from various demographics. This flaw can lead to diagnostic errors, resulting in misdiagnosis, improper treatment plans, and ultimately adverse patient outcomes. By failing to reflect the real experiences and conditions of diverse patient populations, these AI systems can lead to significant health disparities.

Moreover, the ramifications of flawed healthcare diagnostics are profound and wide-reaching. For instance, consider a scenario where an AI system incorrectly assesses a rare disease due to a lack of adequately representative data. You can imagine the detrimental effects on patients who might not receive timely and correct diagnoses, potentially leading to severe health deterioration or even fatalities. As you recognize the interplay between data quality and patient care, it becomes evident that the implications of bad data in healthcare extend beyond the immediate context, influencing broader healthcare outcomes and shaping public health policies.

It is crucial to raise awareness about the necessity for high-quality, diverse, and current medical datasets in AI applications. Initiatives aimed at creating robust and comprehensive databases that reflect real-world patient demographics will significantly enhance the performance of AI diagnostic tools. You must advocate for partnerships between tech developers, healthcare professionals, and patient advocacy groups to ensure that AI systems effectively represent the population and can be trusted with making significant health decisions.

- Explore the role of AI in diagnosing diseases.

- Discuss the consequences of misdiagnosis in healthcare.

- Analyze the need for diverse patient data in AI training.

- Investigate case studies showcasing AI's diagnostic failures.

- Delve into the ethical implications of AI in healthcare.

Diagnostics in healthcare rely heavily on comprehensive and up-to-date data. When bad data enters the picture, it can skew results, lead to misdiagnoses, and ultimately compromise patient safety. Addressing these data quality issues is critical for ensuring that AI tools can accurately assist healthcare professionals in making informed decisions.

- Explain how AI is changing the landscape of healthcare diagnostics.

- Discuss the importance of data accuracy in medical algorithms.

- Describe the challenges faced by AI in healthcare data integration.

- Analyze the role of patient consent in AI data collection.

- Investigate the benefits of collaborative data sharing among institutions.

Inaccurate Financial Predictions

Examples abound when we consider the critically important area of financial predictions impacted by bad data. Financial institutions increasingly rely on AI to forecast market trends, assess risk, and guide investment strategies. However, inaccurate historical data can lead to poor forecasting, resulting in financial losses not only for the institutions but also for their clients and stakeholders. When AI systems make decisions based on incomplete or erroneous data, you will often see the cascading effects of these choices manifest as market volatility and trust erosion in the financial system.

Another significant issue occurs when AI models are trained on data sets that do not accurately reflect the current economic climate. For example, using outdated data in financial algorithms can lead to a misguided understanding of market conditions, ultimately guiding investment decisions that result in substantial financial repercussions. If the models lack adaptability to new data or fail to account for changing consumer behavior, the ramifications can be dramatic, impacting numerous industries and causing widespread economic instability.

To combat the risks associated with bad data in financial predictions, it is imperative for organizations to prioritize data integrity and continuously update models with accurate, real-time information. By investing in data quality initiatives and technology that enables agile response to market conditions, you can help protect against the pitfalls of misguided financial predictions. You should actively advocate for the importance of transparency and accuracy in AI systems, ensuring that financial forecasts are not only reliable but also contribute positively to overall market health.

- Discuss how AI can transform financial predictions.

- Analyze the impacts of inaccurate financial forecasting.

- Explore strategies for improving data quality in finance.

- Investigate the significance of real-time data in financial AI models.

- Examine case studies of financial institutions that misused AI.

Recognition of data’s centrality to accurate financial predictions cannot be overstated. The financial landscape is dynamic, and AI systems must adapt to reflect evolving conditions. As you engage with these AI technologies, understanding the importance of data quality will empower you and others to navigate potential pitfalls more effectively.

- Explain why data integrity is crucial for financial systems.

- Discuss the historical trends that influence current financial AI models.

- Explore the ethical implications of AI-driven financial decisions.

- Investigate how data analysis tools can improve prediction accuracy.

- Analyze the relationship between public trust and financial AI applications.

Another critical aspect of the examples discussed above involves the intersection of technology and societal well-being. How data is gathered, processed, and utilized in AI systems has far-reaching consequences that extend beyond mere algorithms and into the lives of individuals and communities. You must remain vigilant in ensuring that the integrity of data is upheld, fostering a future where AI complements human judgment rather than undermines it.

The Economic Consequences

Keep in mind that bad data in AI systems can trigger significant economic consequences that ripple across various sectors. The implications are not just confined to technical failures, but they extend into real-world financial impacts, disrupting businesses and affecting the bottom line. As organizational decisions are increasingly reliant on AI-driven insights, the accuracy and reliability of the underlying data become crucial not just to operational success but also to profitability and sustainability in competitive markets.

- Discuss how bad data affects operational costs in AI projects

- Explore ways businesses can mitigate financial losses from bad data

- Analyze a case study of a company that suffered due to poor data quality

Loss of Revenue and Productivity

Productivity loss is one of the most direct and measurable impacts of bad data on AI systems. When an AI solution is trained on flawed data, it produces inaccurate forecasts, which can lead to overproduction or understocking of inventory. For example, imagine you run an ecommerce business and rely on AI to predict customer demand. If your data is skewed, you may end up with excess inventory that ties up capital and incurs storage costs or, conversely, stockouts that frustrate customers and lead to lost sales. These scenarios illustrate how bad data can trap your organization in a cycle of suboptimal decision-making, ultimately compromising both revenue and operational efficacy.

Additionally, the productivity of employees can suffer when they are required to manually correct AI outputs due to data inaccuracies. This painstaking process not only detracts from their important functions but also can lead to a decline in morale. When an AI tool is expected to increase efficiency but instead becomes a source of frustration, the impact on human resources can be profound. Employees may feel disheartened, contributing to an environment where innovation is stifled, and job satisfaction declines, ultimately costing your organization even more in terms of talent retention.

The economic implications extend beyond immediate revenue losses; they compound through a business’s operations over time. Your organization risks missing out on opportunities for growth and development as resources are siphoned off to address these issues. Even when a company recognizes that poor data has led to bad decisions, the path to rectifying these mistakes often demands time and resources that could have been better allocated elsewhere. Without a grasp on how to manage and improve data quality, you significantly curb your organization’s ability to leverage AI’s full potential, adversely affecting its competitive edge in the marketplace.

- Evaluate the correlation between poor data quality and revenue decline.

- Explain how improper data handling impacts workforce engagement.

- Investigate specific industries most vulnerable to losses from data issues.

Damage to Reputation and Trust

Productivity dips resulting from bad data have a secondary effect: they can erode your brand’s reputation in the marketplace. As customers increasingly turn their attention to data-driven solutions, any hints of inconsistency or unreliability can prompt skepticism towards your offerings. If customers perceive your AI-driven products as inaccurate or flawed, it can lead to customer attrition and diminished brand loyalty. Trust once lost is challenging to regain, and the efforts required to restore that trust can demand resources far exceeding the original losses.

The long-term effects are particularly troubling for those businesses that stake their brand identity on data-driven innovation. Consumers and clients expect that companies deploying AI are leading the way in accuracy and reliability. However, when bad data leads to glaring errors or poor service, it can create public relations nightmares. Word of mouth, accelerated by social media, can amplify a single incident into a widespread perception that your organization cannot be trusted with critical information, potentially driving customers to competitors.

- Analyze how trust in AI can be rebuilt after bad data incidents

- Discuss the correlation between brand loyalty and data integrity

- Explore strategies for enhancing customer relationships amidst data challenges

Increased Costs of Rectification

This topic brings to light the often-overlooked fact that bad data doesn’t just induce initial havoc; it can considerably inflate costs associated with rectifying the errors. When anomalies arise from a faulty dataset, your organization may find itself in the position of responding through corrective actions that require significant investment. Hiring data specialists, investing in algorithms for data cleansing, and implementing upgraded data governance models can quickly lead to escalating expenses. If these issues are left unaddressed, they can also result in further operational setbacks, creating a snowball effect that rapidly intensifies financial strain.

Rectification efforts not only pull financial resources from potential growth projects, but they can also consume time and energy that could have been expended on strategic initiatives. The costs incurred in bad data rectification can compound, as teams may scramble to fix errors while simultaneously handling other responsibilities. The marathon-like pace of rectification can lead to employee burnout and affect decision-making quality. Moreover, the inherent complexity of accurate data management may necessitate the integration of additional data tools and technologies, further driving up costs. As a result, your organization’s ability to innovate and thrive in an ever-evolving landscape can become severely compromised.

- Explore different strategies to address data issues proactively.

- Assess the implications of ongoing data rectification efforts on company finances.

- Investigate the balance between immediate rectification costs versus long-term value.

A detailed understanding of the economic fallout from bad data empowers you to recognize the importance of robust data management practices. By committing to data quality, transparency, and accountability, your business can navigate and mitigate these issues, reinforcing its market standing and promoting sustainable growth in an AI-driven era.

- Discuss the importance of data governance frameworks.

- Evaluate the role of employee training in data management initiatives.

- Suggest technological tools that can enhance data accuracy and reliability.

The Social Implications

After exploring the technical ramifications of bad data on AI systems, it’s crucial to tackle the broader social implications that emerge from these same challenges. You may already be aware that data does not exist in a vacuum; it reflects the human context, values, and biases that are inherently present in the world. When faulty or biased data is fed into AI algorithms, the resulting systems can reproduce and even amplify existing societal issues. Hence, you must consider how this affects various aspects of social fairness, equity, and trust in technology. The consequences can ripple through our communities, influencing real-world interactions and the fabric of society.

### Discrimination and Unfair Treatment

The use of AI often intersects with various aspects of governance, finance, employment, and law enforcement, leading to serious implications for social equity. When algorithms trained on bad data are deployed to make decisions—such as hiring practices, loan approvals, or criminal sentencing—they can inadvertently discriminate against certain groups. You know that historical biases in datasets can reflect existing inequalities; for instance, if an AI system is trained predominantly on data from privileged demographics, it may unfairly disadvantage minorities and low-income individuals. Imagine the ripple effect: individuals might find themselves unfairly screened out from job opportunities or unjustly penalized by a biased judicial system.

Moreover, bad data can reinforce systemic disparities by providing skewed data that misrepresents the realities faced by disadvantaged groups. You may realize that when AI prioritizes efficiency over ethical considerations, it can lead to automation that fails to take nuanced human contexts into account. For instance, automated decision-making systems that are not rigorously tested for fairness might recommending policies that neglect the specific needs of less privileged communities, leaving them further marginalized and disenfranchised. Therefore, it is imperative for developers and policymakers to be vigilant about data integrity and its potential social repercussions.

You should also be aware that, in a world increasingly reliant on automation, the risk of discrimination poses not just an ethical challenge but also a threat to social cohesion. Trust in AI systems is built upon transparent, fair processes, and when people see that AI decisions are fueled by biased or flawed data, their trust erodes. As AI continues to evolve, the stakes become higher, and the imperative to address bad data is no longer a concern for technologists alone; it is a societal issue that necessitates collaborative awareness and action.

- How does discrimination manifest in AI systems?

- What are the examples of unfair treatment caused by AI?

- How can you reduce bias when developing AI algorithms?

- What are the moral responsibilities of AI developers regarding bias?

- What is the impact of discriminatory AI on society?

### Erosion of Public Trust in AI

The introduction and application of AI technologies have the potential to greatly benefit society; however, bad data can lead to significant missteps that erode public trust in such systems. The loss of trust can stem from experiences where individuals have encountered biased outcomes from AI implementations, whether in the job market, healthcare assessments, or financial services. When you witness or hear about these injustices, it naturally makes you wary of AI as a whole. You might start to question whether reliance on AI for important decisions—affecting your life or the lives of others—is wise.

A crucial element in sustaining public trust is the belief that AI systems are operating under a framework of fairness and accountability. When you learn that an AI-driven decision is based on flawed, biased, or incomplete data, it compels you to doubt the effectiveness and reliability of such technologies. This erosion of trust could lead individuals to disengage from using AI systems altogether, undermining innovations that could otherwise serve the common good. Thus, conveying a strong commitment to data integrity and ethical considerations in AI development is imperative for organizations aiming to retain the public’s faith in their technologies.

You may also consider that a loss of public trust in AI could have wider societal implications, where citizens begin scrutinizing the motivations of institutions that deploy AI. When you see mistrust brewing, it can foster skepticism toward advancements in technology, impeding progress across sectors. It is not merely a matter of reassurance; instead, it reflects a fundamental need for transparent practices that inspire confidence in those systems. Without this confidence, the transformative potential of AI can be stymied, leaving society at large to grapple with the missed opportunities for improvement and equity.

- What leads to the erosion of public trust in AI?

- How can organizations rebuild trust in AI technologies?

- What are the consequences of distrust in AI for society?

- How can transparency in AI processes enhance public trust?

- Why is trust in AI crucial for its adoption?

Erosion of public trust in AI doesn’t just happen overnight; rather, it builds up as inaccuracies and biases manifest over time within the systems that society relies on. When individuals receive biased outputs or face decisions that adversely affect their lives, you may observe an instinctual reaction to grow skeptical of the underlying technology. As trust directly influences user engagement and adoption rates, if you notice widespread public disillusionment, it can spell trouble for future advancements and the overall acceptance of AI-driven solutions. In essence, bad data can lead to a cycle of distrust that can hinder meaningful integration of AI in areas where it could truly excel.

- How does public perception shape the future of AI?

- What strategies can combat the erosion of trust in AI?

- What role do governments play in maintaining trust in AI?

- How can education improve public understanding of AI?

- Why is understanding user concerns critical for AI developers?

### Unequal Access to Opportunities and Resources

It is imperative to acknowledge that bad data may lead to unequal access to opportunities and resources, especially as society leans more into AI-driven methodologies for decision-making. When algorithms are based on skewed datasets, you might find that marginalized communities are disproportionately affected, denying them access to the same opportunities afforded to others. For example, if you consider financial lending decisions informed by historical data that reflects economic disparities, bias can perpetuate the cycle of poverty by restricting access to credit for those who need it most.

Moreover, you may come to understand that education and employment sectors are not immune to these issues either; AI systems used to filter job candidates can inadvertently prioritize individuals whose backgrounds align with the dominant demographic in the dataset. This perpetuates a cycle where those from underrepresented groups face barriers to entry, further exacerbating social inequality and stifling diversity in fields that thrive on varied perspectives. In scenarios like this, bad data acts as a thief, robbing communities of opportunities that may lead to economic mobility, innovation, and social progress.

Consequently, you should recognize that unequal access to resources is not just a technical problem but a moral one as well. As AI continues to be embedded in critical areas of public and private life, you might feel empowered to advocate for fairer data collection practices, ensuring that diverse voices and experiences objectify the information that drives these systems. By pushing for change and accountability, you can help to create an equitable landscape where AI tools serve to level the playing field rather than reinforce an unjust status quo.

- How does AI influence access to opportunities?

- What strategies exist to address unequal access due to bad data?

- What are the implications of unequal resource distribution in society?

- How do marginalized communities utilize AI for opportunities?

- What role does data literacy play in addressing resource inequalities?

Trust plays a pivotal role in addressing the theme of unequal access. You might realize that without trust, individuals may hesitate to engage with technologies designed to create new opportunities. If AI systems are perceived as amplifying existing inequalities rather than alleviating them, such distrust can further deepen the divides within society. Therefore, encouraging inclusivity and transparency within AI initiatives is imperative for fostering equitable access to resources and opportunities for all—especially those who have historically been sidelined.

- How can trust empower marginalized communities in AI?

- What are the consequences of distrust on accessibility to opportunities?

- How does trust relate to public policy in AI?

- What steps can organizations take to build trust with underserved populations?

- Why is accountability important in ensuring equitable access to AI resources?

Regulatory Measures and Standards

Unlike other technological advancements, the deployment and optimization of Artificial Intelligence (AI) hinge significantly on the integrity of the data fed into these systems. Bad data can lead to detrimental consequences, not just for efficiency but also for ethical considerations that can arise from inaccurate or biased algorithms. Therefore, a strong regulatory framework is crucial to creating a standardized environment where reliable data practices are prioritized. This is where regulatory measures and standards come into play, shaping how industries handle data and ensuring accountability at every level.

Government Initiatives and Guidelines

Government initiatives play a pivotal role in establishing the necessary guidelines to mitigate the impact of bad data in AI. In various jurisdictions, agencies are formulating frameworks that outline best practices for data collection, management, and usage, ensuring that organizations are held accountable for the quality of their data. These guidelines not only serve to protect the end-users and consumers but also foster public trust in AI technologies. You might find that such initiatives encompass a range of recommendations—from transparency in data sourcing to scrupulous auditing processes aimed at identifying and eliminating data biases.

Moreover, many governments are investing in public awareness campaigns to educate stakeholders about the importance of data quality and its implications for AI. These campaigns underscore the potential risks posed by bad data, advocating for a culture that prioritizes data integrity from inception to deployment. Organizations are encouraged to establish robust data governance frameworks that align with the established guidelines, helping them not only to comply with regulations but also to enhance their overall data practices and governance. This push for awareness and structured practices can lead to more reliable AI systems that serve society better and mitigate harms associated with poor data quality.

Additionally, collaboration between government entities and tech organizations is increasingly seen as vital for the success of these guidelines. Task forces and committees dedicated to AI and data ethics are emerging, bringing together industry leaders, policymakers, and researchers to share insights and develop cohesive strategies. Such partnerships are crucial in adapting to rapid technological changes and continuously evolving threats, ensuring that regulatory measures evolve in tandem with advancements in AI technology. You can see this ongoing dialogue as a way to create frameworks that are not only practical but also reflect a deep understanding of both technology and societal needs.

What are the latest government initiatives to improve data quality for AI?

How can organizations ensure compliance with government guidelines on data management?

What role does public awareness play in government efforts to regulate AI data practices?

Explain the importance of collaboration between governments and tech organizations in establishing AI regulations.Industry-Wide Best Practices and Certifications

Regulatory bodies have recognized the need for industry-wide best practices to complement governmental efforts, creating a cohesive approach to data management in AI. You may find that certifications are being established to verify that organizations adhere to these best practices, which encompass comprehensive data governance, ethical data sourcing, and transparent algorithm designs. These certifications signal to consumers and partners that an organization is committed to maintaining high standards in data integrity—a critical aspect in the age of AI. You should be aware that obtaining such certifications can not only provide competitive advantages but also facilitate trust between stakeholders.

The development of these best practices is often a collaborative process, with various industry leaders and expert groups coming together to identify key areas for standardization. This may include guidelines on data anonymization techniques, protocols for bias detection and mitigation, and ethical considerations for using AI in sensitive applications. Organizations that actively engage in these standardization efforts find themselves not only equipped with better data handling procedures but also positioned to lead in an increasingly conscientious market. Furthermore, they tend to foster innovation by implementing cutting-edge data quality assurance practices.

By adopting industry-wide best practices and pursuing certifications, organizations can actively contribute to the establishment of a more reliable and ethical AI landscape. Regulatory frameworks are best supported when industry players actively engage in adhering to established standards and advocating for continuous improvement. It becomes a shared responsibility where you, as a professional in the AI field, have a significant role in fostering a culture of accountability, transparency, and trustworthiness in data practices which, in turn, reinforces the credibility of AI systems themselves.

What are some recognized certifications for data management in AI?

List best practices for data ethics and governance that organizations should adopt.

How do certifications influence consumer trust in AI applications?

What benefits do organizations gain from pursuing industry-wide standards in data management?Regulatory measures should not just be limited to national laws but extend to international recognition of best practices and certifications for AI. It is necessary to build a consensus on what constitutes acceptable data practices across borders, as the challenges of bad data often transcend geographical boundaries. Organizations with a global presence must navigate an intricate web of international regulations that affect how they handle data, making it imperative to engage in discussions that yield standardized protocols applicable worldwide.

What are the challenges of achieving international standards in data management for AI?

How can organizations ensure compliance with varying international regulations on data?

What role do international certifications play in harmonizing data standards across countries?

Provide examples of successful international cooperation in establishing data standards for AI.The Need for International Cooperation

Guidelines from various countries demonstrate a clear consensus that international cooperation is imperative when it comes to setting standards for data integrity in AI. You may recognize that the complexities of data flow across borders make it critical for professionals and organizations to engage with one another to develop shared frameworks that address the nuances of diverse systems and regulatory environments. International collaboration can lead to the creation of universal guidelines that not only govern data handling but also promote ethical considerations in AI deployment, ensuring that solutions are both effective and socially responsible.

Furthermore, as AI development accelerates globally, the need for synchronized standards becomes increasingly apparent. When countries engage in cooperative efforts, they facilitate knowledge sharing and the adoption of best practices tailored to their unique socio-economic contexts. This could involve cross-border partnerships for research and the development of AI technologies that are built upon sound data foundations. You should be aware that such collaborative efforts can lead to strengthened regulatory frameworks, as diverse viewpoints can enrich discussions about the implications of data quality and ethical AI use.

Ultimately, international cooperation nurtures a sense of accountability that transcends borders. This becomes a vital foundation on which to build trust not only among countries but also between organizations and consumers. With the potential for bad data to mislead AI outcomes and societal progress, ensuring a unified approach to data integrity is key to harnessing the full potential of AI responsibly. This means that you play a crucial role in this process as a stakeholder in the AI landscape, where your commitments to best practices can contribute to larger global initiatives.

What are the requisite commitments for countries to cooperate on data standards in AI?

How can organizations contribute to international efforts aimed at improving data integrity?

What are the potential benefits of harmonized data standards for global AI deployment?

Describe the impact of cultural differences on cooperation regarding international data guidelines.Certifications play a critical role in establishing frameworks for international cooperation as they set universal benchmarks for data quality. These certifications assure that organizations meet specific, internationally recognized standards, fostering a culture of accountability across borders. As such, the credibility of your organization will be strengthened when you pursue these internationally recognized certifications, highlighting your commitment to maintaining high data standards that support responsible AI use in any market.

What certifications are recognized internationally for data management in AI?

How can certifications assist organizations in navigating international data regulations?

Discuss the role of certifications in facilitating international cooperation on data standards.

What are the benefits of maintaining high data integrity in an internationally certified environment?Plus, these frameworks not only guide your organization’s practices but also elevate the overall industry standards across various sectors leveraging AI. Engaging with the regulatory landscape can lead to better data practices, which are necessary in ensuring that AI systems function correctly and ethically. Thus, when you commit to high standards, you contribute to a more substantial collective movement towards ethical AI and responsible data stewardship.

The Role of Human Oversight

Your engagement with Artificial Intelligence (AI) is vital in mitigating the risks posed by bad data. As AI systems become increasingly complex, the role of human oversight becomes paramount. You must understand that while AI can process vast amounts of data, it relies heavily on humans to inform, refine, and validate its outputs. Without the critical judgment and contextual awareness that you, as a human, provide, AI misinterpretations can occur, leading to erroneous conclusions and potentially dangerous consequences. This oversight not only helps in correcting mistakes but also in recognizing the latent biases that may exist within the data being processed.