Learning the fundamentals of machine learning is like embarking on a thrilling journey into the future of technology. In this informative blog post, we will unravel the mysteries of algorithms, data patterns, and the incredible potential of artificial intelligence. Join us as we explore the world of machine learning, demystifying complex concepts and paving the way for a brighter, smarter tomorrow.

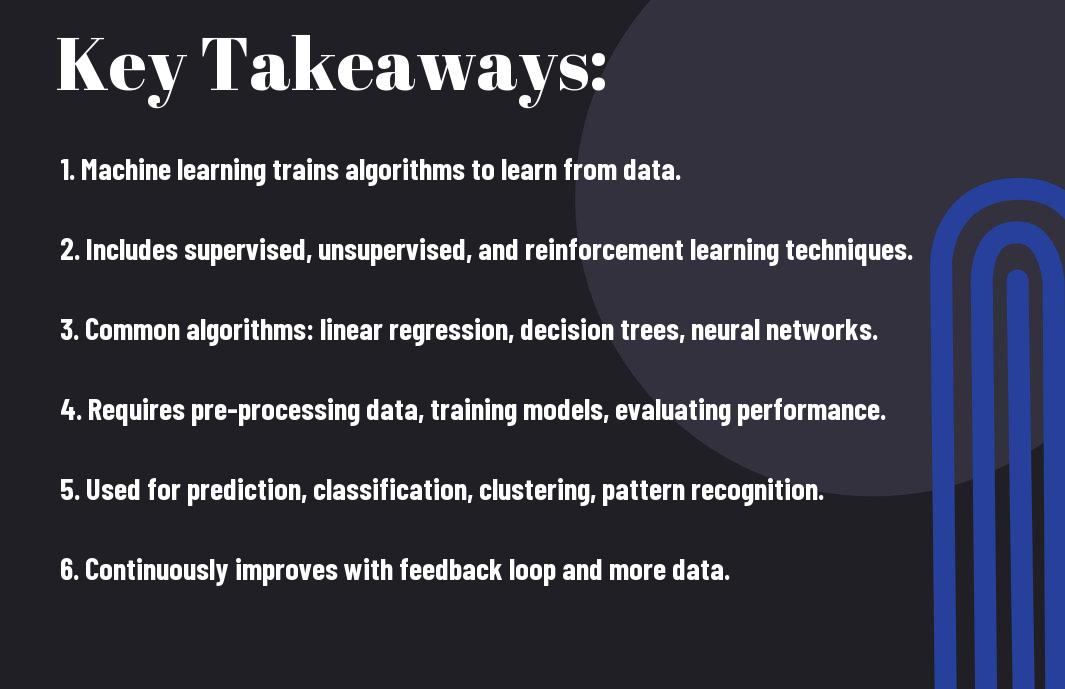

Key Takeaways:

- Machine Learning Definition: Machine learning is a branch of artificial intelligence that allows computers to learn and improve from experience without being explicitly programmed.

- Types of Machine Learning: There are three main types of machine learning – supervised learning, unsupervised learning, and reinforcement learning, each with its own unique approach and applications.

- Supervised Learning: In supervised learning, the model is trained on labeled data, where it learns to map inputs to outputs based on the provided examples.

- Unsupervised Learning: Unsupervised learning involves training the model on unlabeled data, where the goal is to find hidden patterns or structures within the data without specific guidance.

- Reinforcement Learning: Reinforcement learning is a trial-and-error learning method where the model learns to make decisions by receiving feedback in the form of rewards or penalties based on its actions.

What is Machine Learning?

For a layperson, the term “Machine Learning” may seem like a concept straight out of a science fiction movie. However, Machine Learning is a fundamental aspect of artificial intelligence that allows machines to learn from data and improve their performance over time without being explicitly programmed. In essence, it enables computers to identify patterns in data and make decisions or predictions based on that analysis.

Let’s explore some sample prompts related to understanding Machine Learning:

- What is the definition of Machine Learning?

- How does Machine Learning differ from traditional programming?

- Can you explain the role of data in Machine Learning?

Definition and History

Any discussion about Machine Learning would be incomplete without delving into its definition and historical roots. Machine Learning can be defined as the scientific study of algorithms and statistical models that computers use to perform specific tasks without explicit instructions. This concept traces its origins back to the 1950s when researchers began exploring how machines could learn from data and improve their performance autonomously.

Some prompt samples to dive deeper into the Definition and History of Machine Learning:

- What are the key milestones in the history of Machine Learning?

- How has the definition of Machine Learning evolved over time?

- Can you explain the role of Alan Turing in the development of Machine Learning?

Types of Machine Learning

Understanding the types of Machine Learning is crucial to comprehending the diverse approaches used in this field. Machine Learning can be broadly classified into three main types: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning involves training a model on labeled data, unsupervised learning deals with unlabeled data to find patterns, and reinforcement learning focuses on decision-making through trial and error.

Here are some prompts to aid in understanding the Types of Machine Learning:

- What is the difference between supervised and unsupervised learning?

- Can you explain the concept of reinforcement learning with examples?

- How do neural networks play a role in different types of Machine Learning?

| Aspect | Description |
|---|---|
| Definition | Machine Learning is a field of artificial intelligence that focuses on developing algorithms and models that enable computers to improve their performance on a specific task using data without being explicitly programmed. |
| Types | There are three main types of Machine Learning: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning uses labeled data, unsupervised learning works with unlabeled data, and reinforcement learning involves learning through trial and error. |
| History | The history of Machine Learning dates back to the 1950s when researchers began exploring the concept of machines learning from data autonomously. Over time, significant advancements and milestones have shaped the evolution of Machine Learning. |
| Role of Data | Data plays a crucial role in Machine Learning as algorithms rely on large datasets to learn patterns and make predictions. The quality and quantity of data have a direct impact on the performance and accuracy of machine learning models. |
| Applications | Machine Learning finds applications across various industries, including healthcare, finance, marketing, and more. It is used for tasks such as image recognition, natural language processing, recommendation systems, and predictive analytics. |

Key Concepts in Machine Learning

If you’re plunging into the world of Machine Learning, it’s important to grasp the foundational concepts that underpin this exciting field. From supervised learning to reinforcement learning, each concept plays a crucial role in training algorithms to make data-driven predictions or decisions. Here’s a breakdown of the key concepts you should familiarize yourself with:

- What is supervised learning?

- Explain unsupervised learning.

- How does reinforcement learning work?

- Can you elaborate on semi-supervised learning?

Supervised Learning

One of the most common types of machine learning is supervised learning. In this approach, the algorithm is trained on labeled data, where each input is paired with the correct output. Through this training process, the model learns to map inputs to outputs and can make predictions on new, unseen data. Common algorithms used in supervised learning include linear regression, support vector machines, and neural networks.
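To make the labeled-data idea concrete, here is a minimal Python sketch. It uses k-nearest neighbours, which is not one of the algorithms named above but is short enough to show the two halves of supervised learning: learning from input/output pairs, then predicting labels for unseen inputs. The toy points, labels, and the `knn_predict` helper are invented for illustration.

```python
import math
from collections import Counter

# Labeled training data: each input (a feature vector) is paired with its correct output.
train_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.5), (7.0, 6.0), (6.5, 7.5)]
train_y = ["small", "small", "small", "large", "large", "large"]


def knn_predict(x, X, y, k=3):
    """Predict the label of x by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to x with its label, nearest first.
    neighbours = sorted(zip((math.dist(x, xi) for xi in X), y))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Predictions on new, unseen inputs.
print(knn_predict((1.2, 1.4), train_X, train_y))  # small
print(knn_predict((6.8, 6.9), train_X, train_y))  # large
```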

- How does supervised learning differ from unsupervised learning?

- Provide examples of supervised learning tasks.

- Explain the concept of overfitting in supervised learning.

Unsupervised Learning

An integral part of machine learning, unsupervised learning involves training algorithms on unlabeled data. Unlike supervised learning, there are no correct outputs provided during the training phase. Instead, the algorithm must find patterns or structures in the data on its own. Clustering, dimensionality reduction, and association rule learning are common unsupervised learning techniques used for tasks like customer segmentation and anomaly detection.
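As a sketch of clustering, the first technique listed, here is Lloyd’s k-means algorithm in plain Python. It assumes 2-D numeric points and a chosen number of clusters `k`; note that no labels are passed in, so the grouping comes entirely from the data. The points are made up.

```python
import math
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group unlabeled points into k clusters with Lloyd's algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k distinct data points
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[i] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centroids, clusters


# Two made-up, well-separated groups of points.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
```

Because the result depends on the starting centroids, practical implementations usually run k-means several times from different seeds and keep the best clustering.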

- Provide examples of unsupervised learning applications.

- How does unsupervised learning help in exploratory data analysis?

- Explain the challenges of evaluating unsupervised learning models.

An important aspect of unsupervised learning is that it can uncover hidden patterns or insights in data without the need for labeled examples. This ability makes unsupervised learning valuable in scenarios where labeled data is scarce or expensive to obtain. By allowing algorithms to learn independently from data, unsupervised learning opens the door to a wide range of exploratory and predictive applications across various industries.

- How does unsupervised learning contribute to feature engineering?

- Explain the concept of self-organizing maps in unsupervised learning.

- What are the limitations of unsupervised learning compared to supervised learning?

Machine Learning Algorithms

- Explain different machine learning algorithms

- Discuss how algorithms are used in machine learning

- Provide examples of popular algorithms used in the field

Linear Regression

- Explain the concept of linear regression

- Discuss how linear regression is used in predictive modeling

- Provide examples of data sets where linear regression can be applied

Regression involves constructing a model to predict a dependent variable from one or more independent variables. Linear regression is a fundamental machine learning algorithm that models the relationship between the input variables and the output as linear: the dependent variable is predicted as a weighted sum of the independent variables plus an intercept, with the weights usually chosen to minimize the squared prediction error. Linear regression is commonly used in fields such as economics, finance, and biology to make predictions or to understand relationships between variables.
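A minimal sketch of single-variable linear regression, fitted with the closed-form ordinary least-squares solution. The data points are made up and roughly follow y = 2x + 1; the function name is our own.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (slope, intercept) minimizing squared error for y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form ordinary least squares: slope = cov(x, y) / var(x).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
slope, intercept = fit_simple_linear_regression(xs, ys)  # slope ≈ 1.97, intercept ≈ 1.09
prediction = slope * 6 + intercept  # predicted y for an unseen x = 6
```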

Decision Trees

- Explain the concept of decision trees

- Discuss how decision trees are used in classification and regression tasks

- Provide examples of decision trees in real-world applications

Decision trees are a popular machine learning algorithm used for both classification and regression tasks. The algorithm works by splitting the data into subsets based on the attributes or features that best separate the data points. This process is repeated recursively, forming a tree-like structure of decision nodes until a stopping criterion is met. Decision trees are easy to interpret and visualize, making them a valuable tool for understanding and explaining how a model makes predictions based on the input data.

Decision trees are versatile and can handle both numerical and categorical data. They are robust to outliers and do not require extensive data preprocessing. However, decision trees can be prone to overfitting, especially when dealing with complex datasets. To mitigate this, techniques like pruning and ensemble methods such as Random Forest can be employed to improve the generalization performance of decision trees.
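The splitting step described above can be sketched for a single numeric feature using Gini impurity, one common split criterion (entropy is another). This is one split, not a full tree: a complete implementation would apply `best_split` recursively to each side until a stopping criterion is met. The feature, labels, and helper names are hypothetical.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions (0 means a pure node)."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_split(values, labels):
    """Find the threshold on one numeric feature that minimizes weighted Gini impurity."""
    best = (None, float("inf"))
    n = len(values)
    for threshold in sorted(set(values))[:-1]:  # splitting at the maximum leaves one side empty
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        score = len(left) / n * gini(left) + len(right) / n * gini(right)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical feature: hours studied; label: passed the exam or not.
hours = [1, 2, 3, 6, 7, 8]
passed = ["no", "no", "no", "yes", "yes", "yes"]
threshold, impurity = best_split(hours, passed)  # threshold 3, impurity 0.0
```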

Random Forest

- Explain the concept of Random Forest

- Discuss how Random Forest improves upon decision trees

- Provide examples of applications where Random Forest is effective

Algorithms like Random Forest are ensemble learning methods that combine multiple decision trees to create a more robust and accurate model. Instead of relying on a single decision tree, Random Forest generates a forest of trees and aggregates their predictions to make a final decision. This approach helps to reduce overfitting and increase the model’s predictive power by considering the insights from multiple trees.

To improve performance, Random Forest introduces randomness in the tree-building process by considering a random subset of features at each split. This randomization reduces the correlation between individual trees, leading to a more diverse set of models that collectively provide better predictions. Random Forest is commonly used in various domains such as finance, healthcare, and marketing for tasks like classification, regression, and anomaly detection due to its ability to handle large datasets and complex relationships.
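Here is a deliberately small sketch of the Random Forest recipe, under two simplifying assumptions: each “tree” is a one-split stump, and the random feature subset at each split has size one. It still shows both sources of randomness (bootstrap samples and random features) and the majority-vote aggregation. The data and all function names are illustrative, not a library API.

```python
import random
from collections import Counter


def train_stump(X, y, feature):
    """Fit a one-split decision tree ("stump") on one feature by minimizing misclassifications."""
    best = None
    for threshold in sorted({row[feature] for row in X}):
        left = [lab for row, lab in zip(X, y) if row[feature] <= threshold]
        right = [lab for row, lab in zip(X, y) if row[feature] > threshold]
        # Each side predicts its majority label; an empty right side copies the left label.
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(lab != left_label for lab in left) + sum(lab != right_label for lab in right)
        if best is None or errors < best[0]:
            best = (errors, feature, threshold, left_label, right_label)
    return best[1:]  # (feature, threshold, left_label, right_label)


def train_forest(X, y, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample using one randomly chosen feature."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw len(X) row indices with replacement.
        idx = [rng.randrange(len(X)) for _ in range(len(X))]
        feature = rng.randrange(len(X[0]))  # random feature subset of size one
        forest.append(train_stump([X[i] for i in idx], [y[i] for i in idx], feature))
    return forest


def predict_forest(forest, x):
    """Aggregate the stumps' predictions by majority vote."""
    votes = Counter(left if x[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Made-up two-feature data with two well-separated classes.
X = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (8.0, 9.0), (9.0, 8.0), (9.0, 9.0)]
y = ["a", "a", "a", "b", "b", "b"]
forest = train_forest(X, y)
```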

Data Preprocessing

To ensure that the data is in a suitable format for machine learning algorithms, it is vital to preprocess it. This involves cleaning, transforming, and scaling the data. Data preprocessing plays a crucial role in the success of a machine learning model, as it helps in improving the quality of the input data and ultimately enhances the performance of the model.

- Handling missing values

- Encoding categorical data

- Splitting the dataset into training and test sets

Data Cleaning

Any data scientist will tell you that one of the most critical steps in data preprocessing is data cleaning. This involves handling missing values, removing duplicates, and dealing with outliers that can adversely affect the model’s performance. Missing values can be imputed using various techniques such as mean, median, or mode imputation, while outliers can be detected and treated using statistical methods.

- Removing duplicates from the dataset

- Treating outliers in the data

- Imputing missing values in the dataset
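The three cleaning steps above might look like this in plain Python. This is a sketch on made-up records: it uses median imputation (the mean would be pulled upward by the extreme income here) and the 1.5 × IQR rule as the statistical outlier test, both common choices rather than the only ones.

```python
import statistics

# Hypothetical raw records: (age, income); None marks a missing income.
rows = [(25, 40000), (32, None), (25, 40000), (41, 52000),
        (38, 48000), (29, 45000), (35, 1000000)]

# 1. Remove exact duplicate rows, keeping the first occurrence and the original order.
deduped = list(dict.fromkeys(rows))

# 2. Impute missing incomes with the median of the observed incomes.
observed = [inc for _, inc in deduped if inc is not None]
median_income = statistics.median(observed)
imputed = [(age, median_income if inc is None else inc) for age, inc in deduped]

# 3. Drop rows outside [Q1 - 1.5 * IQR, Q3 + 1.5 * IQR], a common rule of thumb.
q1, _, q3 = statistics.quantiles([inc for _, inc in imputed], n=4, method="inclusive")
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
cleaned = [(age, inc) for age, inc in imputed if low <= inc <= high]
```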

Data Transformation

Any machine learning model requires the right input features to make accurate predictions. Data transformation helps in achieving this by converting the raw data into a suitable format for training the model. This may involve feature scaling, normalization, or encoding categorical variables. By transforming the data appropriately, you can ensure that the model can effectively learn from the input data and make reliable predictions.

- Feature scaling for standardization

- Normalizing the data

- Encoding categorical variables
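Categorical variables are often encoded with one-hot encoding, sketched below in plain Python. The `colors` column is a hypothetical example; sorting the categories simply gives a reproducible column order.

```python
def one_hot_encode(values):
    """Map each categorical value to a 0/1 vector with one slot per distinct category."""
    categories = sorted(set(values))  # fixed, reproducible column order
    index = {cat: i for i, cat in enumerate(categories)}
    vectors = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        vectors.append(row)
    return categories, vectors


colors = ["red", "green", "blue", "green"]
categories, encoded = one_hot_encode(colors)
# categories -> ['blue', 'green', 'red']
# encoded    -> [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```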

Feature Scaling

Another crucial aspect of data preprocessing is feature scaling. Many machine learning algorithms, especially distance-based and gradient-based ones, perform better when the input features are on a similar scale. Normalization (for example, min-max scaling) maps each feature to a fixed range such as [0, 1], while standardization rescales each feature to have a mean of 0 and a standard deviation of 1. Either way, no single feature dominates the others simply because of its units, leading to a more stable and accurate model.

- Standardizing features in the dataset

- Normalizing features to a specific range

- Min-max scaling for feature scaling
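Both scaling methods fit in a few lines of Python. In this sketch, `min_max_scale` maps values into [0, 1] by default and `standardize` produces zero mean and unit population standard deviation; the income values are invented.

```python
import statistics


def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly map values so the smallest becomes new_min and the largest new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: nothing to scale
        return [new_min for _ in values]
    return [new_min + (v - lo) / (hi - lo) * (new_max - new_min) for v in values]


def standardize(values):
    """Subtract the mean and divide by the population standard deviation (z-scores)."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


incomes = [30000, 45000, 60000, 90000]
scaled = min_max_scale(incomes)  # [0.0, 0.25, 0.5, 1.0]
z_scores = standardize(incomes)  # mean 0, standard deviation 1
```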

Transformation of data is a crucial step in the data preprocessing pipeline. It involves converting the raw data into a suitable format that can be used by machine learning algorithms. By transforming the data appropriately, you can ensure that the model can learn effectively and make accurate predictions.

Model Evaluation Metrics

Keep your models in check by evaluating their performance using various metrics. These evaluation metrics help you understand how well your model is performing and make improvements accordingly.

1. What is the accuracy of the model?

2. How can I evaluate my model using precision and recall?

3. Calculate the F1 score and ROC-AUC of the model.

Accuracy

Accuracy is usually the first metric used to measure a machine learning model’s performance. It is the percentage of correct predictions out of all predictions the model makes. Accuracy is straightforward, but it is not always the best measure of a model’s performance, especially on imbalanced datasets.
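A short Python sketch shows why accuracy can mislead on imbalanced data: on a hypothetical dataset with 95 negatives and 5 positives, a model that always predicts the negative class still scores 95% while never finding a single positive.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical imbalanced labels: 95 negatives (0) and 5 positives (1).
y_true = [0] * 95 + [1] * 5
always_negative = [0] * 100  # a useless "model" that never predicts the positive class
print(accuracy(y_true, always_negative))  # 0.95
```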

Some prompts related to Accuracy are:

1. How can I improve the accuracy of my model?

2. What does it mean if the model has 90% accuracy?

3. Can accuracy be used as the sole metric for model evaluation?

Precision and Recall

Evaluating a model’s performance should not rely solely on accuracy. Precision and recall offer a more nuanced view of a model’s effectiveness, especially in binary classification problems. Precision is the proportion of positive predictions that are actually positive, while recall is the proportion of actual positives that the model correctly identified.

Some prompts related to Precision and Recall are:

1. How is precision different from recall in machine learning?

2. Which is more important – precision or recall?

3. How can I interpret a model with high precision but low recall?

Let’s dive deeper into the concepts of precision and recall. Precision is crucial when the cost of false positives is high, such as in medical diagnoses, where a false positive can lead to unnecessary treatments. On the other hand, recall becomes imperative when the cost of false negatives is high, like in detecting fraudulent activities, where missing a fraudulent transaction can have serious consequences.
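Precision and recall follow directly from counts of true positives, false positives, and false negatives. The sketch below assumes binary labels with 1 as the positive class and returns 0.0 when a ratio is undefined, which is one common convention; the labels are made up.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, and false negatives."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp, fp, fn


def precision_recall(y_true, y_pred, positive=1):
    tp, fp, fn = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0  # how many flagged items were real positives
    recall = tp / (tp + fn) if tp + fn else 0.0     # how many real positives were flagged
    return precision, recall


y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
precision, recall = precision_recall(y_true, y_pred)  # tp=2, fp=1, fn=2 -> 2/3 and 0.5
```

This model has higher precision than recall: when it flags something it is usually right, but it misses half of the actual positives.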

F1 Score and ROC-AUC

Model evaluation goes beyond accuracy, precision, and recall. The F1 score strikes a balance between precision and recall, providing a single metric to gauge a model’s overall performance. Additionally, the ROC-AUC (Receiver Operating Characteristic – Area Under Curve) is commonly used to evaluate the performance of binary classification models, especially in scenarios with class imbalance.

Some prompts related to F1 Score and ROC-AUC are:

1. How is F1 score calculated in machine learning?

2. What does an ROC-AUC value of 0.5 indicate?

3. How can I improve the F1 score of my model?

Understanding the F1 score and ROC-AUC metric helps you assess a model’s performance comprehensively. While the F1 score considers both false positives and false negatives, the ROC-AUC metric measures the trade-off between true positive rate (sensitivity) and false positive rate (1-specificity), providing a more nuanced evaluation of the model’s predictive power.
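A minimal sketch of both metrics: F1 as the harmonic mean of precision and recall, and ROC-AUC computed as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative (ties count as half), which equals the area under the ROC curve. The pairwise loop costs O(P × N), so it only suits small examples; the labels and scores are made up.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly by the scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
auc = roc_auc(y_true, scores)  # 8 of 9 pairs ranked correctly, about 0.889
f1 = f1_score(2 / 3, 0.5)      # 4/7, about 0.571
```

A model whose scores carry no information (for example, the same score for every example) gets an ROC-AUC of 0.5, which is why 0.5 is read as random guessing.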

Overfitting and Underfitting

In machine learning, we often encounter the twin challenges of overfitting and underfitting. These two concepts play a critical role in the accuracy and generalization of our models. Overfitting occurs when a model learns the training data too well, including noise or random fluctuations, leading to poor performance on new data. Underfitting, on the other hand, happens when a model is too simple to capture the underlying patterns of the data, resulting in low accuracy on both the training and test datasets.

- Explain the concept of overfitting.

- What is underfitting in machine learning?

- How does overfitting affect the model's performance?

Causes of Overfitting

Overfitting can be caused by various factors such as having a complex model, using too few training examples, or training for too many iterations. A model with high complexity, like one with too many parameters relative to the number of training examples, can memorize the training data instead of learning the underlying patterns. Insufficient training data can also contribute to overfitting as the model may not generalize well without seeing enough diverse examples.

- What are the common causes of overfitting?

- How does the complexity of a model relate to overfitting?

- Explain the role of insufficient training data in overfitting.

Techniques to Avoid Overfitting

To combat overfitting, various techniques can be employed. Regularization methods like L1 and L2 regularization add penalties to the loss function based on the complexity of the model, discouraging overfitting. Cross-validation helps in evaluating the model’s performance on unseen data, giving insights into its generalization abilities. Another approach is to use dropout, a technique that randomly drops neurons during training to prevent the network from relying too much on certain activations.
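Two of these techniques are easy to sketch without a framework: adding an L1 or L2 penalty on the weights to a loss value, and applying inverted dropout to a layer’s activations. The function names and the penalty strength `lam` are illustrative assumptions, not a particular library’s API.

```python
import random


def penalized_loss(data_loss, weights, lam, kind="l2"):
    """Add an L1 (sum of |w|) or L2 (sum of w^2) penalty, scaled by lam, to the data loss."""
    if kind == "l1":
        penalty = sum(abs(w) for w in weights)
    elif kind == "l2":
        penalty = sum(w * w for w in weights)
    else:
        raise ValueError("kind must be 'l1' or 'l2'")
    return data_loss + lam * penalty


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero each activation with probability p_drop during training and
    scale the survivors by 1 / (1 - p_drop) so the expected activation is unchanged."""
    if not training or p_drop == 0.0:
        return list(activations)  # at inference time the layer passes values through
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


weights = [3.0, -4.0]
l2_loss = penalized_loss(1.0, weights, lam=0.1)             # 1.0 + 0.1 * 25
l1_loss = penalized_loss(1.0, weights, lam=0.1, kind="l1")  # 1.0 + 0.1 * 7
acts = [0.2, 0.5, 0.9, 1.0]
dropped = dropout(acts, p_drop=0.5, rng=random.Random(0))
```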

- What are some common techniques to prevent overfitting?

- Explain how regularization helps tackle overfitting.

- How does dropout work in neural networks to prevent overfitting?

One effective way to avoid overfitting is by tuning the hyperparameters of the model. Hyperparameters control the learning process and model complexity, and finding the right balance can help prevent overfitting. By adjusting parameters like learning rate, batch size, or model architecture, a machine learning practitioner can steer the model towards better performance on unseen data.
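Hyperparameters are commonly tuned with k-fold cross-validation: each candidate value is scored on data the model did not train on, and the best-scoring value wins. To keep the sketch short, it assumes a one-parameter ridge regression (a line through the origin with an L2 penalty) and tunes its regularization strength `lam` rather than the learning rate or batch size; the same loop works for any hyperparameter. The data and helper names are made up.

```python
def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds whose sizes differ by at most one."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def fit_ridge_1d(xs, ys, lam):
    """Ridge regression through the origin: w = sum(x*y) / (sum(x*x) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_error(xs, ys, lam, k=5):
    """Average validation mean squared error over k folds for one hyperparameter value."""
    total = 0.0
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        total += sum((ys[i] - w * xs[i]) ** 2 for i in fold) / len(fold)
    return total / k


# Made-up noisy data around y = 2x; in practice, shuffle the rows before splitting.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
ys = [1.2, 1.8, 3.3, 3.9, 5.2, 5.8, 7.1, 8.3, 8.8, 10.1]
grid = [0.0, 0.1, 1.0, 10.0, 100.0]
scores = {lam: cv_error(xs, ys, lam) for lam in grid}
best_lam = min(scores, key=scores.get)
```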

- What role do hyperparameters play in preventing overfitting?

- How can adjusting the learning rate help in avoiding overfitting?

- Explain the importance of tuning batch size for preventing overfitting.

Identifying Underfitting

A model is said to be underfitting when it fails to capture the underlying patterns of the data, resulting in high errors both on the training and test datasets. This can happen when the model is too simple to represent the true relationship in the data, leading to poor performance. Identifying underfitting is crucial as it indicates that the model may need more complexity or a different approach to improve its predictive power.

- Define underfitting in the context of machine learning.

- How can you recognize underfitting in a machine learning model?

- What are the consequences of underfitting on model performance?

To detect underfitting, one can analyze the learning curves of the model during training. If both the training and validation errors are high and close to each other, it suggests underfitting. Another way is to compare the model’s performance with that of a baseline model or more complex models. If the error rates are significantly higher, it indicates the model is underfitting and requires adjustments to improve its predictive capabilities.
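The baseline comparison can be sketched in Python: a model that always predicts the training mean is too simple for data with a clear trend, so both its training and validation errors stay high compared with a straight-line fit. The data and helper names are invented for illustration.

```python
def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)


def fit_constant(xs, ys):
    """A deliberately too-simple model: always predict the training mean."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def fit_line(xs, ys):
    """Ordinary least-squares line y = slope * x + intercept."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


# Made-up data with a clear upward trend.
train_x, train_y = [1, 2, 3, 4, 5, 6], [2.1, 3.9, 6.2, 7.8, 10.1, 12.0]
val_x, val_y = [7, 8], [14.1, 15.9]

results = {}
for name, fit in [("constant", fit_constant), ("linear", fit_line)]:
    model = fit(train_x, train_y)
    train_err = mse(train_y, [model(x) for x in train_x])
    val_err = mse(val_y, [model(x) for x in val_x])
    results[name] = (train_err, val_err)
    print(f"{name}: train MSE {train_err:.2f}, validation MSE {val_err:.2f}")
```

High error on both splits, with little gap between them, is the underfitting signature described above; the linear model lowers both.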

- How can learning curves help identify underfitting?

- Explain the concept of a baseline model in detecting underfitting.

- What is the significance of comparing model performance to detect underfitting?

Techniques to combat underfitting include increasing the model’s complexity by adding more layers or neurons, increasing the training duration, or implementing more sophisticated algorithms. By providing the model with more capacity to learn intricate patterns in the data, one can overcome underfitting and improve its overall performance on both training and unseen datasets.

Neural Networks

Here are some ChatGPT prompt samples related to Neural Networks:

1. Explain the concept of Neural Networks.

2. How do Neural Networks work?

3. What are the applications of Neural Networks?

4. Can you elaborate on the architecture of Neural Networks?

5. Discuss the training process of Neural Networks.

Introduction to Artificial Neural Networks

For centuries, humans have been fascinated by the complexities of the human brain and have attempted to mimic its functions through technology. One such creation inspired by the human brain is the Artificial Neural Network (ANN). ANNs are computational models composed of multiple layers of interconnected nodes, designed to process information much like the human brain. These networks are capable of learning and adapting to complex patterns in data, making them a fundamental concept in the field of machine learning.

Artificial Neural Networks consist of layers of interconnected nodes called neurons, which are organized into input, output, and hidden layers. Each connection between neurons is assigned a weight that determines the strength of the connection. Through a process known as forward propagation, data is fed into the network, computations are performed at each neuron, and an output is generated. This output is then compared to the desired outcome, and any errors are used to adjust the weights in a process called backpropagation. This iterative process of feeding data forward and adjusting weights backward allows the network to learn and improve its predictions over time.
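The forward-then-backward loop can be sketched with a tiny network (2 inputs, 2 hidden sigmoid neurons, 1 sigmoid output) trained on XOR with a squared-error loss. This is an illustrative sketch, not an efficient implementation; with so few hidden units, training can occasionally stall in a local minimum, but the loss should still fall from its starting value.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNet:
    """2 inputs -> 2 hidden sigmoid neurons -> 1 sigmoid output."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]  # [neuron][input]
        self.b_hidden = [0.0, 0.0]
        self.w_out = [rng.uniform(-1, 1) for _ in range(2)]
        self.b_out = 0.0

    def forward(self, x):
        # Forward propagation: weighted sum plus bias, then a sigmoid, layer by layer.
        h = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
             for ws, b in zip(self.w_hidden, self.b_hidden)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w_out, h)) + self.b_out)
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)
        # Backpropagation: chain rule from the loss 0.5 * (y - target)**2 back to each weight.
        delta_out = (y - target) * y * (1 - y)
        delta_hidden = [delta_out * self.w_out[j] * h[j] * (1 - h[j]) for j in range(2)]
        # Gradient-descent updates (deltas were computed with the old weights above).
        for j in range(2):
            self.w_out[j] -= lr * delta_out * h[j]
            self.b_hidden[j] -= lr * delta_hidden[j]
            for i in range(2):
                self.w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
        self.b_out -= lr * delta_out


def mean_loss(net, data):
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


xor_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
net = TinyNet()
initial_loss = mean_loss(net, xor_data)
for epoch in range(5000):
    for x, t in xor_data:
        net.train_step(x, t, lr=0.5)
final_loss = mean_loss(net, xor_data)
```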

Artificial Neural Networks have found applications in various fields such as image and speech recognition, natural language processing, and medical diagnosis. Their ability to learn complex patterns and relationships in data has transformed industries and paved the way for advancements in artificial intelligence. As researchers delve deeper into the mysteries of neural networks, the potential for innovation and discovery in this field continues to expand.

Here are some ChatGPT prompt samples related to Introduction to Artificial Neural Networks:

1. What is the structure of an Artificial Neural Network?

2. How do Artificial Neural Networks learn from data?

3. Explain the concepts of forward propagation and backpropagation in Neural Networks.

4. What are some real-world applications of Artificial Neural Networks?

5. How have Artificial Neural Networks impacted the field of artificial intelligence?

Types of Neural Networks

An important aspect of Neural Networks is the variety of types developed to address specific tasks and challenges. Convolutional Neural Networks (CNNs) are specialized for image recognition tasks, using filters to extract features from visual data. Recurrent Neural Networks (RNNs) are designed to handle sequential data, making them well suited to tasks like language translation and speech recognition. Other types include Long Short-Term Memory (LSTM) networks, which excel at capturing long-term dependencies in data, and Generative Adversarial Networks (GANs), which are used to generate realistic images.

After gaining a solid understanding of these different types of Neural Networks, one can better appreciate the diversity of applications and the nuanced approaches each network takes to solve unique problems. The key is to match the right neural network architecture with the specific requirements of the task at hand, ensuring optimal performance and efficiency in processing the data.


| Neural Network Type | Primary Functionality and Applications |
|---|---|
| Convolutional Neural Networks (CNNs) | Specialized for image recognition tasks; used in computer vision applications. |
| Recurrent Neural Networks (RNNs) | Designed for handling sequential data; used in language translation and speech recognition. |
| Long Short-Term Memory (LSTM) networks | Excel at capturing long-term dependencies; commonly used in time series prediction and speech recognition. |
| Generative Adversarial Networks (GANs) | Utilized for generating realistic images; often used in creating deepfakes and image synthesis. |

Here are some ChatGPT prompt samples related to Types of Neural Networks:

1. What are Convolutional Neural Networks (CNNs) specialized for?

2. How do Recurrent Neural Networks (RNNs) differ from other types of Neural Networks?

3. Can you explain the concept of Long Short-Term Memory (LSTM) networks?

4. What are Generative Adversarial Networks (GANs) used for in the field of artificial intelligence?

5. How important is it to choose the right type of Neural Network for a specific task?

Training Neural Networks

Training Neural Networks is a crucial aspect of their functionality, as this process enables the network to learn from data and improve its predictive capabilities. The training process involves feeding input data into the network, comparing the output to the desired outcome, and adjusting the network’s weights to minimize errors. This iterative process continues until the network achieves the desired level of accuracy and performance on the given tasks.

Neural Networks learn through the process of backpropagation, where the errors in the network’s predictions are used to update the weights and biases in each neuron. This process of updating the network’s parameters based on the gradient of the error function allows the network to gradually improve its performance over time. Additionally, techniques such as regularization and optimization algorithms are employed to prevent overfitting and enhance the network’s generalization capabilities.

Training Neural Networks can be a computationally intensive process, requiring significant amounts of data and computational power. Techniques such as mini-batch training and distributed computing have been developed to accelerate the training process and make it more scalable. As researchers continue to explore innovations in training techniques and algorithms, the capabilities of Neural Networks are expected to advance further, unlocking new possibilities in the field of machine learning.
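Mini-batch training is simple to sketch: instead of computing the gradient over the whole dataset, each update uses a small random batch. The example below fits a line y = w·x + b by minimizing mean squared error on noiseless made-up data; the learning rate, batch size, and epoch count are illustrative assumptions, not tuned recommendations.

```python
import random


def minibatch_sgd(xs, ys, batch_size=4, lr=0.1, epochs=500, seed=0):
    """Fit y = w * x + b with mini-batch stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the data in a new random order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this mini-batch only.
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


xs = [x / 10 for x in range(20)]  # 0.0, 0.1, ..., 1.9
ys = [3 * x + 0.5 for x in xs]    # noiseless y = 3x + 0.5
w, b = minibatch_sgd(xs, ys)
```

Each epoch here makes five cheap updates instead of one expensive full-dataset update, which is the trade-off that makes mini-batches attractive for large datasets.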

Here are some ChatGPT prompt samples related to Training Neural Networks:

1. How does the training process of Neural Networks work?

2. What is backpropagation, and why is it important in training Neural Networks?

3. How do optimization algorithms improve the training of Neural Networks?

4. Can you explain the concept of regularization in the context of Neural Network training?

5. What are some challenges faced in training Neural Networks, and how are they overcome?


Deep Learning

Not all machine learning is the same. Deep learning, a subset of machine learning, goes further by using multi-layered artificial neural networks loosely inspired by the structure and function of the human brain. These networks can learn from unstructured data, sometimes without labels, perform complex pattern recognition tasks, and make intelligent decisions. Deep learning models automatically discover and learn useful features from the data rather than relying on hand-engineered ones. They excel in tasks like image and speech recognition, natural language processing, and more.

- Generate text based on a specific topic.

- Summarize a given paragraph on deep learning.

- Explain the difference between deep learning and machine learning.

Convolutional Neural Networks

An essential architecture within deep learning is the Convolutional Neural Network (CNN). CNNs are particularly well suited to analyzing visual imagery because they are designed to recognize patterns in grid-structured data such as two-dimensional images. Through a hierarchical learning process, CNNs automatically and adaptively learn spatial hierarchies of features, making them highly effective in tasks like image recognition and classification.
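The two core operations of a CNN layer, convolution and pooling, can be sketched in plain Python. Like most deep-learning libraries, `conv2d` below actually computes a cross-correlation (the kernel is not flipped), here with no padding and a stride of 1. The image and the edge-detecting kernel are made up; in a real CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation: slide the kernel over the image and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the strongest response in each size x size window."""
    return [[max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]


# A 4x5 image that is dark on the left and bright on the right (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(4)]
vertical_edge = [[-1, 1],
                 [-1, 1]]  # responds where intensity increases from left to right
features = conv2d(image, vertical_edge)  # every row is [0, 2, 0, 0]: the edge is at column 1
pooled = max_pool(features)              # [[2, 0]]
```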

- Explain the concept of convolutional layers in CNNs.

- Describe the structure of a typical CNN.

- Discuss the importance of pooling layers in CNNs.

Recurrent Neural Networks

Neural networks that are specifically designed to handle sequential data are known as Recurrent Neural Networks (RNNs). Unlike traditional feedforward neural networks, RNNs have connections that form directed cycles, allowing them to exhibit dynamic temporal behavior. This makes RNNs ideally suited for tasks like handwriting recognition, speech recognition, and more, where the context of previous inputs is crucial for making predictions.
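The recurrent connection can be sketched as a single-unit RNN in Python, where each hidden state depends on the current input and the previous state: h_t = tanh(w_x · x_t + w_h · h_(t-1) + b). The weights are arbitrary values chosen for illustration; a trained RNN would learn them with backpropagation through time.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Run a single-unit recurrent network over a sequence and return every hidden state."""
    h = h0
    states = []
    for x in inputs:
        # The w_h * h term feeds the previous state back in: this is the "directed cycle".
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# The last input is 0 in both sequences, yet the final states differ because of history.
with_signal = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
without_signal = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
```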

- Describe the difference between RNNs and traditional feedforward neural networks.

- Explain the concept of backpropagation through time in RNNs.

- Discuss the vanishing gradient problem in RNNs.

Neural networks are a type of deep learning model inspired by the structure and function of the human brain. They consist of interconnected nodes called neurons, organized in layers and capable of learning complex patterns and relationships in data. Through forward and backward propagation of signals, neural networks adjust their internal parameters to minimize prediction errors and improve accuracy on tasks like classification, regression, and generative modeling.

- Explain the basic structure of a neural network.

- Discuss the concept of activation functions in neural networks.

- Describe the training process of neural networks using gradient descent.

Understanding Long Short-Term Memory Networks

Long Short-Term Memory (LSTM) networks are a specialized type of RNN architecture that excels in capturing long-range dependencies in sequential data. LSTMs address the vanishing gradient problem by introducing gating mechanisms that regulate the flow of information within the network. This enables LSTMs to retain important information over long periods, making them highly effective in tasks like speech recognition, machine translation, and time series prediction.
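One step of an LSTM cell, reduced to a single unit with scalar weights, is sketched below. The parameter names (`w_*` for the input, `u_*` for the previous hidden state, `b_*` for biases) are our own labels. The usage illustrates the gating effect: with a strongly positive forget-gate bias the cell state survives 50 steps almost intact, while a neutral bias lets it decay to nearly zero.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """Advance a single-unit LSTM by one time step and return (h, c)."""
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate: how much old memory to keep
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate: how much new content to write
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate content
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate: how much memory to expose
    c = f * c_prev + i * g  # additive cell-state update
    h = o * math.tanh(c)
    return h, c


def final_cell_state(params, steps=50, c0=1.0):
    """Feed zeros for `steps` steps starting from cell state c0 and return the final cell state."""
    h, c = 0.0, c0
    for _ in range(steps):
        h, c = lstm_step(0.0, h, c, params)
    return c


names = ("w_f", "u_f", "w_i", "u_i", "w_g", "u_g", "w_o", "u_o", "b_g", "b_o")
base = {name: 0.0 for name in names}
remember = dict(base, b_f=10.0, b_i=-10.0)  # forget gate close to 1, input gate close to 0
forget = dict(base, b_f=0.0, b_i=-10.0)     # forget gate = 0.5 at every step
c_remember = final_cell_state(remember)     # stays close to 1.0
c_forget = final_cell_state(forget)         # about 0.5 ** 50
```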

- Explain the architecture of a Long Short-Term Memory (LSTM) network.

- Discuss the role of forget gates and input gates in LSTMs.

- Describe the training process of LSTM networks.

Natural Language Processing

Next, we delve into the fascinating realm of Natural Language Processing (NLP), a field within machine learning that focuses on the interaction between computers and humans through natural language. NLP enables machines to understand, interpret, and generate human language in ways that are useful and meaningful. One of the key challenges in NLP is making sense of the vast amount of unstructured data found in text, speech, and other forms of human communication.

- Generate a summary of a given text document.

- Translate a sentence from English to French.

- Classify email messages as spam or not spam.

Text Preprocessing

Any successful NLP task begins with effective text preprocessing, a crucial step that involves cleaning and formatting the raw text data to make it suitable for further analysis. Text preprocessing often includes tasks like tokenization (breaking text into words or sentences), removing stop words, and stemming (reducing words to their root form). These steps help in standardizing text data and improving the efficiency and accuracy of NLP models.
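The three preprocessing steps can be sketched with Python’s standard library. The stop-word list is a tiny illustrative subset, and `crude_stem` is a toy suffix stripper standing in for a real stemming algorithm such as Porter’s, so it will mishandle many words.

```python
import re

STOP_WORDS = {"the", "a", "an", "is", "are", "and", "of", "to", "in"}  # tiny illustrative list


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]


def crude_stem(word):
    """Strip one common English suffix, keeping at least three characters of the word."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


text = "The models are learning patterns in the data"
tokens = tokenize(text)              # ['the', 'models', 'are', 'learning', 'patterns', 'in', 'the', 'data']
content = remove_stop_words(tokens)  # ['models', 'learning', 'patterns', 'data']
stems = [crude_stem(t) for t in content]  # ['model', 'learn', 'pattern', 'data']
```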

- Tokenize a given sentence into individual words.

- Remove stop words from a paragraph of text.

- Apply stemming to a list of words.
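The three preprocessing steps above can be sketched in plain Python. The stop-word list and suffix rules below are small, hand-picked assumptions; the stemmer is a toy suffix stripper, not the Porter algorithm that libraries such as NLTK provide. Note that stems need not be dictionary words ("fences" becomes "fenc").

```python
import re

# A small, hand-picked stop-word list (an assumption; real libraries ship larger lists).
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "and", "or", "of", "to", "in", "on", "it", "this"}

# Toy suffix-stripping rules, checked longest first (NOT the Porter stemmer).
SUFFIXES = ("ing", "ed", "ly", "es", "s")

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def stem(word):
    for suffix in SUFFIXES:
        # Keep at least three characters so short words are not mangled.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    return [stem(t) for t in remove_stop_words(tokenize(text))]

print(preprocess("The cats are jumping over the fences quickly!"))
# ['cat', 'jump', 'over', 'fenc', 'quick']
```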

Sentiment Analysis

Sentiment analysis uses NLP techniques to determine whether a piece of text expresses positive, negative, or neutral sentiment, based on the language it uses. It is widely used in social media monitoring, customer feedback analysis, and market research to gauge public opinion about products, services, or events.

- Analyze the sentiment of a product review.

- Determine the overall sentiment of a Twitter post.

- Classify customer feedback as positive or negative.
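One of the simplest approaches to sentiment analysis is a lexicon-based scorer: sum the polarity of known words and map the total to a label. The sketch below uses a tiny, hypothetical lexicon with made-up weights and a one-word negation rule; production systems typically rely on trained classifiers instead.

```python
import re

# Tiny hand-made sentiment lexicon (hypothetical weights, for illustration only).
LEXICON = {"good": 1, "great": 2, "love": 2, "excellent": 3,
           "bad": -1, "poor": -2, "hate": -2, "terrible": -3}
NEGATORS = {"not", "never", "no"}

def sentiment(text, threshold=0):
    """Return 'positive', 'negative', or 'neutral' from summed word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        # Flip the polarity when the previous word is a simple negator ("not good").
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > threshold:
        return "positive"
    if score < -threshold:
        return "negative"
    return "neutral"

for review in ["I love this phone, the camera is excellent",
               "Battery life is not good and support was terrible",
               "It arrived on Tuesday"]:
    print(sentiment(review), "|", review)
```

A fixed lexicon misses sarcasm, domain-specific vocabulary, and longer-range negation, which is one reason learned models have largely replaced this approach.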

Sentiment analysis has evolved significantly in recent years with the integration of advanced machine learning algorithms and deep learning models. These advances have improved its accuracy and granularity, enabling a more nuanced understanding of the emotions and opinions expressed in text.

Language Models

Language models form the backbone of many NLP applications, enabling machines to generate human-like text and understand the structure of language better. These models are trained on vast amounts of text data to predict the likelihood of a word given its context, allowing them to complete sentences, generate responses, and even create original content. Language models have broad applications in machine translation, chatbots, and text generation tasks.

- Complete a given sentence with appropriate words.

- Generate a response to a customer query.

- Create a short story based on a prompt.

Language models have seen a significant boost in performance and capabilities with the development of transformer-based models like GPT-3 (Generative Pre-trained Transformer 3). These models have pushed the boundaries of what machines can accomplish in understanding and generating human language, paving the way for more advanced NLP applications and services in the future.
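The core idea of predicting a word from its context can be shown with a bigram model, which estimates P(next word | previous word) from counts. This is a deliberately tiny sketch on a made-up three-sentence corpus; transformer models like GPT-3 condition on far longer contexts with learned representations rather than raw counts.

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count word pairs and turn them into conditional probabilities P(next | prev)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return {prev: {w: c / sum(ctr.values()) for w, c in ctr.items()}
            for prev, ctr in counts.items()}

def complete(model, prompt, max_words=10):
    """Greedily extend the prompt with the most likely next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        next_probs = model.get(words[-1])
        if not next_probs:
            break
        nxt = max(next_probs, key=next_probs.get)
        if nxt == "</s>":
            break
        words.append(nxt)
    return " ".join(words)

corpus = ["the cat sat on the mat",
          "the cat ate the fish",
          "the dog sat on the rug"]
model = train_bigram_model(corpus)
print(model["the"]["cat"])                       # 0.3333333333333333 (2 of 6 words after "the")
print(complete(model, "the dog", max_words=4))   # the dog sat on the cat
```

Always taking the single most likely word (greedy decoding) tends to loop on small models; real systems usually sample from the distribution or use beam search.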

Computer Vision

For example, here are some prompts related to computer vision:

1. "Explain the concept of Computer Vision."

2. "What are the applications of Computer Vision in real life?"

3. "How does Computer Vision work in autonomous driving?"

Image Processing

One way to explore image processing is through prompts like these:

1. "Explain the steps involved in image processing."

2. "What are the different techniques used in image enhancement?"

3. "How does noise removal work in image processing?"

Object Detection

Some prompts related to object detection are:

1. "How is object detection used in security surveillance?"

2. "Explain the role of deep learning in object detection."

3. "What are the challenges faced in real-time object detection?"

One of the key tasks in computer vision is object detection, which involves identifying objects within images or videos and locating them, typically with bounding boxes. Object detection has various applications, such as surveillance systems, autonomous vehicles, and image tagging on social media. This area of computer vision has advanced significantly with the introduction of deep learning models such as convolutional neural networks (CNNs), which can effectively detect and classify objects within images.
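Many detectors, CNN-based ones included, propose several overlapping candidate boxes for the same object. A common post-processing step is non-maximum suppression (NMS) based on intersection over union (IoU). The sketch below assumes boxes given as `(x1, y1, x2, y2)` corner coordinates and uses made-up boxes and confidence scores.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Keep the highest-scoring box and drop others that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

# Three candidate boxes: two overlap heavily, one is elsewhere in the image.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.75, 0.8]
print(non_max_suppression(boxes, scores))  # [0, 2]
```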

Image Segmentation

Some prompts related to image segmentation are:

1. "What are the different types of image segmentation techniques?"

2. "How is image segmentation used in medical imaging?"

3. "Explain the role of semantic segmentation in computer vision."

A few more prompts on image segmentation:

1. "What are the key challenges in image segmentation?"

2. "How does image segmentation differ from object detection?"

3. "Explain the concept of instance segmentation."

A deeper look at computer vision leads us to image segmentation, a technique that partitions an image into multiple segments (groups of pixels) to simplify its representation. Image segmentation is crucial in various fields, including medical imaging for tumor detection and autonomous driving for identifying different objects on the road. Semantic segmentation, which assigns a class label (such as “road” or “pedestrian”) to every pixel, helps machines understand the context of different parts of an image and interpret visual data more effectively.
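The simplest form of segmentation is thresholding: label each pixel as foreground or background by brightness. This is not semantic segmentation (which is usually done with trained CNNs), but it shows the idea of partitioning pixels into segments. The sketch picks the threshold with Otsu's method, which maximizes the between-class variance; the 6x6 image is a made-up array.

```python
import numpy as np

def threshold_segment(image, threshold):
    """Label each pixel 1 (foreground) if brighter than threshold, else 0 (background)."""
    return (image > threshold).astype(np.uint8)

def otsu_threshold(image):
    """Pick the threshold that maximizes between-class variance (Otsu's method)."""
    values = image.ravel()
    best_t, best_var = 0, -1.0
    for t in np.unique(values)[:-1]:          # skip the max so the foreground is never empty
        fg, bg = values[values > t], values[values <= t]
        w_fg, w_bg = fg.size / values.size, bg.size / values.size
        between_var = w_fg * w_bg * (fg.mean() - bg.mean()) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t

# A synthetic grayscale "image": a bright square on a dark, slightly noisy background.
image = np.array([
    [10, 12, 11, 10, 13, 12],
    [11, 200, 210, 205, 12, 10],
    [12, 198, 220, 202, 11, 13],
    [10, 205, 199, 210, 10, 12],
    [13, 11, 12, 10, 11, 10],
    [12, 10, 13, 11, 12, 11],
])
t = otsu_threshold(image)
mask = threshold_segment(image, t)
print(t)
print(mask)
```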

Model Deployment

However sophisticated a machine learning model is, it delivers value only once it is deployed for real-world use, which makes deployment a critical step in the process. Model deployment involves making the trained model available for inference, where it can take input data and provide predictions or classifications. This is where the rubber meets the road, as they say, and where the potential of a machine learning model is truly realized.

1. Generate predictions on new data using the deployed model.

2. Monitor the model's performance in real-time.

3. Update and re-deploy models as needed for improved accuracy.

Model Serving

One of the key aspects of model deployment is model serving. This involves creating an interface through which the model can receive input data, perform its computations, and return the predictions or classifications. Model serving is crucial for integrating the machine learning model into existing systems or applications, allowing it to provide value in real-world scenarios.

1. Set up an API for the deployed model to interact with other software.

2. Scale the model serving infrastructure to handle varying loads.

3. Ensure security protocols are in place to protect the model and data.
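A model-serving interface can be as small as an HTTP endpoint that accepts JSON features and returns a prediction. The sketch below uses only Python's standard library; the `/predict` route, the JSON shape, and the fixed linear "model" (`WEIGHTS`, `BIAS`) are hypothetical stand-ins for a real trained model. Python's documentation advises against using `http.server` in production, so treat this as an illustration of the request/response flow rather than a deployable service.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Stand-in for a trained model: fixed linear weights (hypothetical values).
WEIGHTS = [0.4, -0.2, 0.1]
BIAS = 0.05

def predict(features):
    """Score one example; a real service would call the loaded model here."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return {"score": score, "label": int(score > 0)}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            body = json.loads(self.rfile.read(length))
            result, status = predict(body["features"]), 200
        except (ValueError, KeyError, TypeError) as exc:   # bad JSON or bad input shape
            result, status = {"error": str(exc)}, 400
        payload = json.dumps(result).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

def make_server(port=8000):
    return HTTPServer(("127.0.0.1", port), PredictHandler)
```

Calling `make_server().serve_forever()` starts the service; a POST to `/predict` with a body such as `{"features": [1, 0, 0]}` should return a JSON object with `score` and `label` fields, while malformed input gets a 400 response.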

Containerization

One popular method for model deployment is containerization. By encapsulating the model, its dependencies, and the serving logic in a container, such as a Docker container, the deployment process becomes streamlined and portable. This allows the same container to run across different environments with far fewer compatibility issues.

1. Package the model and dependencies into a Docker container.

2. Deploy the containerized model on various platforms like Kubernetes.

3. Facilitate reproducibility of the model environment for easy updates and maintenance.

Cloud Deployment

Serving machine learning models in the cloud is a common practice due to its scalability, flexibility, and cost-effectiveness. Cloud deployment allows for easy accessibility of models from anywhere, integration with other cloud services, and efficient utilization of resources. Platforms like AWS, Google Cloud, and Azure provide robust infrastructures for deploying and managing machine learning models in the cloud.

1. Leverage cloud services for deploying, scaling, and monitoring models.

2. Utilize serverless architectures for cost-effective and efficient deployment.

3. Implement auto-scaling to handle varying workloads and optimize resource usage.
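Auto-scaling usually comes down to a proportional rule. The sketch below is modeled on the rule documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current_replicas * current_metric / target_metric)`, with no change when the ratio is within a tolerance (0.1 by default there). The function name, the min/max bounds, and the CPU numbers are illustrative assumptions.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule modeled on the Kubernetes Horizontal Pod Autoscaler.

    current_metric / target_metric could be, e.g., average CPU utilization or
    requests per second per replica.
    """
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

# 3 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(3, 90, 60))   # 5
# 4 replicas at 20% CPU against a 60% target -> scale in.
print(desired_replicas(4, 20, 60))   # 2
```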

For those new to machine learning, understanding the nuances of model deployment can be daunting. However, with the right approach and tools, deploying machine learning models can be a rewarding experience that unlocks the potential of your models in real-world applications.

1. How to deploy a machine learning model on AWS?

2. Best practices for monitoring model performance in production.

3. Steps to containerize a TensorFlow model for deployment.

Ethics in Machine Learning

All aspects of developing and deploying machine learning models come with ethical considerations. As AI systems become more prevalent in our daily lives, ensuring that these systems operate fairly and ethically is crucial. Several key areas are of particular importance in the ethical use of machine learning, including bias, fairness, transparency, explainability, and accountability.

- How can we ensure that machine learning models are not biased?

- What are some ethical implications of using AI in decision-making processes?

- Discuss the importance of fairness and transparency in machine learning algorithms.

Bias in Machine Learning

The presence of bias in machine learning algorithms is a significant concern. Biases can be unintentionally introduced through the data used to train these models, leading to discriminatory outcomes. Addressing bias in machine learning requires careful curation of training data, evaluation of model performance across different demographic groups, and the implementation of techniques to mitigate bias.

- How can bias in machine learning be identified and mitigated?

- Discuss the impact of biased algorithms on marginalized communities.

- What role does ethics play in addressing bias in AI technologies?
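Evaluating model performance across demographic groups, as described above, can start with a simple per-group breakdown of accuracy and positive-prediction rate. The loan-approval labels, predictions, and group names below are hypothetical.

```python
from collections import defaultdict

def per_group_metrics(y_true, y_pred, groups):
    """Accuracy and positive-prediction rate for each demographic group."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "predicted_pos": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["correct"] += int(t == p)
        s["predicted_pos"] += int(p == 1)
    return {g: {"accuracy": s["correct"] / s["n"],
                "positive_rate": s["predicted_pos"] / s["n"]}
            for g, s in stats.items()}

# Hypothetical loan-approval predictions (1 = approve) for two groups, A and B.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
for group, m in per_group_metrics(y_true, y_pred, groups).items():
    print(group, m)
```

Both groups have identical true outcomes here, yet group B gets lower accuracy (0.6 vs 1.0) and a lower approval rate (0.2 vs 0.6), the kind of gap an aggregate accuracy figure would hide.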

Fairness and Transparency

To ensure the ethical use of machine learning, fairness and transparency must be prioritized. Fairness involves ensuring that AI systems do not discriminate against individuals or groups based on protected attributes such as race or gender. Transparency, on the other hand, involves making AI systems understandable and interpretable to users, regulators, and other stakeholders.

- How can fairness be integrated into the development of machine learning models?

- Discuss the importance of transparency in AI decision-making processes.

- What are some challenges in achieving fairness and transparency in machine learning algorithms?

To further promote fairness and transparency in machine learning, it is vital to incorporate ethical guidelines into the development and deployment of AI systems. This includes implementing mechanisms for auditing and monitoring AI algorithms to detect and address potential biases or unfair outcomes. Additionally, fostering diverse and inclusive teams in AI development can help mitigate biases and improve the overall fairness of machine learning technologies.
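One simple auditing check compares selection rates between groups. The sketch flags any group whose rate falls below 0.8 times the highest group's rate, mirroring the "four-fifths rule" used as a rule of thumb in US employment guidance. It is a screening heuristic rather than a complete fairness criterion, and the predictions and group labels are hypothetical.

```python
def selection_rates(y_pred, groups):
    """Fraction of positive predictions per group."""
    rates = {}
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return rates

def disparate_impact_audit(y_pred, groups, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` x the highest group's rate."""
    rates = selection_rates(y_pred, groups)
    best = max(rates.values())
    ratios = {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged

# Hypothetical model decisions (1 = selected) for two groups.
y_pred = [1, 0, 1, 1, 0, 0, 0, 0, 1, 0]
groups = ["A"] * 5 + ["B"] * 5
ratios, flagged = disparate_impact_audit(y_pred, groups)
print(round(ratios["B"], 2), flagged)
```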

Explainability and Accountability

Machine learning models often operate as “black boxes,” making it challenging to understand how they arrive at decisions or predictions. Ensuring explainability and accountability in AI is crucial for building trust and understanding in these systems. Explainable AI techniques aim to provide insights into how algorithms make decisions, while accountability involves establishing mechanisms for holding individuals and organizations responsible for the outcomes of AI systems.

- Discuss the importance of explainability in machine learning models.

- How can we ensure accountability in the use of AI technologies?

- What are some challenges in implementing explainable AI techniques?
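Permutation feature importance is one widely used, model-agnostic explainability technique: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below treats the model purely as a black-box `predict` function; the toy model and random data are illustrative assumptions. scikit-learn offers a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import numpy as np

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling column j breaks its link to the target while keeping its distribution.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances

# A "black box" that, unknown to the auditor, only looks at feature 0.
def black_box(X):
    return (X[:, 0] > 0.5).astype(int)

rng = np.random.default_rng(42)
X = rng.random((500, 3))
y = black_box(X)  # labels the model gets exactly right
print(permutation_importance(black_box, X, y).round(2))
```

Only feature 0 shows a large drop, which reveals what the black box actually relies on without inspecting its internals.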

For improved accountability and transparency in machine learning, organizations must establish clear guidelines for the ethical use of AI, including mechanisms for explaining decisions made by algorithms and processes for addressing instances of bias or discrimination. By prioritizing transparency and accountability, we can build more trustworthy and ethically sound AI systems for the future.

Machine learning technologies have the power to drive innovation and transform various industries, but their ethical implications cannot be overlooked. By considering aspects such as bias, fairness, transparency, explainability, and accountability in the development and deployment of AI systems, we can harness the potential of machine learning while upholding ethical standards and promoting responsible AI usage.

Real-World Applications

Your understanding of machine learning concepts can be greatly enhanced by exploring real-world applications where these technologies are making a significant impact. Let’s examine some key areas where machine learning is being used to tackle complex problems and drive innovation. Below are some example prompts, beginning with healthcare:

- How is machine learning used in healthcare?

- Explain the role of machine learning in diagnosing diseases.

- What are the applications of machine learning in personalized medicine?

- How does machine learning improve patient outcomes in healthcare?

Healthcare

Healthcare is a domain where machine learning is revolutionizing processes and outcomes. From personalized treatment plans to early disease detection, the applications of machine learning in healthcare are vast. For instance, predictive analytics can help identify patients at risk of certain medical conditions, allowing for early interventions and personalized care. Additionally, machine learning algorithms can analyze vast amounts of medical data to uncover patterns and trends that human experts might miss, leading to more accurate diagnoses and treatment recommendations.

Some prompts related to machine learning in healthcare are:

- How can machine learning assist in early disease detection?

- Explain the impact of machine learning on patient care outcomes.

- What are the challenges of implementing machine learning in healthcare?

- Describe the role of machine learning in drug discovery and development.
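The "identify patients at risk" idea can be sketched as a logistic regression that turns patient features into a risk probability. Everything below is synthetic: the two standardized features, the simulated labels, and the 0.5 follow-up threshold are assumptions for illustration, not clinical data or guidance.

```python
import numpy as np

def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights by batch gradient descent on the average log-loss."""
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])   # prepend a bias column
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))            # predicted probabilities
        w -= lr * Xb.T @ (p - y) / len(y)            # gradient of the mean log-loss
    return w

def risk_scores(w, X):
    Xb = np.hstack([np.ones((X.shape[0], 1)), X])
    return 1.0 / (1.0 + np.exp(-Xb @ w))

# Synthetic standardized features (think age, blood pressure); labels are simulated
# so that higher values raise the chance of the condition. Not real patient data.
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 2))
true_logits = -1.0 + 1.5 * X[:, 0] + 1.0 * X[:, 1]
y = (rng.random(300) < 1 / (1 + np.exp(-true_logits))).astype(float)

w = train_logistic_regression(X, y)
new_patients = np.array([[2.0, 1.5], [-1.0, -0.5]])
scores = risk_scores(w, new_patients)
for s in scores:
    label = "flag for follow-up" if s > 0.5 else "routine"
    print(f"risk={s:.2f} ({label})")
```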

Finance

One of the most prominent applications of machine learning in finance is in algorithmic trading, where complex algorithms analyze market data to make investment decisions at speeds and volumes impossible for human traders. Machine learning is also used for fraud detection, risk management, and customer service in the financial industry. By analyzing historical data and real-time market trends, machine learning models can identify patterns that help financial institutions make more informed decisions and mitigate risks.

Some prompts related to machine learning in finance include:

- How does machine learning improve trading strategies in finance?

- Explain the role of machine learning in credit scoring systems.

- What are the benefits of using machine learning for fraud detection in finance?

- Describe the challenges of implementing machine learning in financial forecasting.
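Production fraud-detection systems use trained models over many features, but the underlying idea of learning what normal behavior looks like and flagging deviations can be shown with a simple statistical baseline. The card history, the incoming amounts, and the z-score threshold of 3 below are hypothetical.

```python
import statistics

def flag_unusual_transactions(history, new_amounts, z_threshold=3.0):
    """Flag new amounts that sit far outside a customer's historical spending."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    flags = []
    for amount in new_amounts:
        z = (amount - mean) / stdev if stdev > 0 else 0.0
        flags.append((amount, round(z, 1), abs(z) > z_threshold))
    return flags

# Hypothetical card history (in dollars) and three incoming transactions.
history = [42.0, 38.5, 55.0, 47.2, 39.9, 51.3, 44.8, 60.1, 36.7, 49.5]
for amount, z, suspicious in flag_unusual_transactions(history, [45.0, 58.0, 950.0]):
    print(f"${amount:>7.2f}  z={z:>6.1f}  {'FLAG' if suspicious else 'ok'}")
```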

Retail

Machine learning is also transforming the retail industry by enabling personalized shopping experiences, optimized inventory management, and demand forecasting. By analyzing customer data and purchase histories, retailers can offer tailored product recommendations and promotions, enhancing customer satisfaction and loyalty. Furthermore, machine learning algorithms can analyze sales trends to optimize pricing strategies and inventory levels, ultimately improving operational efficiency and profitability.

Some prompts related to machine learning in retail are:

- How is machine learning used to personalize shopping experiences in retail?

- Explain the impact of machine learning on demand forecasting in the retail sector.

- What are the challenges of implementing machine learning in inventory management?

- Describe the role of machine learning in optimizing pricing strategies for retailers.
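Tailored product recommendations are often built with item-based collaborative filtering: products bought by the same customers are treated as similar, and a customer is offered unbought items similar to what they already own. The purchase matrix and product names below are hypothetical, and cosine similarity is one common choice of similarity measure among several.

```python
import numpy as np

# Hypothetical purchase matrix: rows are customers, columns are products (1 = bought).
products = ["tent", "sleeping bag", "camp stove", "yoga mat", "water bottle"]
purchases = np.array([
    [1, 1, 1, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 1, 1],
    [1, 1, 1, 0, 0],
    [0, 0, 0, 1, 1],
])

def item_similarity(matrix):
    """Cosine similarity between product columns."""
    norms = np.linalg.norm(matrix, axis=0)
    norms[norms == 0] = 1.0                      # avoid division by zero for unsold items
    normalized = matrix / norms
    return normalized.T @ normalized

def recommend(customer_row, sim, k=2):
    """Score unbought products by their similarity to what the customer already bought."""
    scores = sim @ customer_row.astype(float)
    scores[customer_row == 1] = -np.inf          # don't recommend items already owned
    ranked = np.argsort(scores)[::-1]
    return [int(i) for i in ranked[:k] if np.isfinite(scores[i])]

sim = item_similarity(purchases)
new_customer = np.array([1, 0, 0, 0, 0])         # bought only a tent
print([products[i] for i in recommend(new_customer, sim)])
# ['sleeping bag', 'camp stove']
```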

On a broader scale, machine learning is reshaping various industries by enhancing decision-making, optimizing operations, and improving customer experiences. As you dig deeper into the practical applications of machine learning, you’ll discover the immense potential these technologies hold for driving innovation and creating positive impact across diverse sectors.

To wrap up

As this overview shows, understanding the basics of machine learning is essential in today’s increasingly technology-driven world. Just like learning the foundations of any subject, grasping the fundamental concepts of machine learning provides a solid base for delving into more complex topics within the field. By familiarizing themselves with the terminology, algorithms, and applications of machine learning, individuals can begin to appreciate the significance of this innovative technology across industries.

Machine learning, with its ability to analyze data and make predictions without explicit programming, offers a world of possibilities for problem-solving and decision-making. As we continue to integrate machine learning into our daily lives through technologies like virtual assistants, recommendation systems, and autonomous vehicles, having a basic understanding of how these systems work becomes increasingly valuable. By demystifying the workings of machine learning, we empower ourselves to not only consume these technologies but also contribute to their advancement and evolution.

As a final point, the journey into the world of machine learning begins with mastering the basics. By honing our comprehension of concepts like supervised learning, unsupervised learning, and neural networks, we pave the way for a deeper exploration of this exciting field. Whether you are a curious novice or a seasoned professional, a solid grasp of the fundamentals of machine learning opens doors to a realm of innovation and possibilities, shaping the future of technology and transforming the way we interact with the world around us.
